How to Debug AI Traffic Using Moesif

We strive for predictable, controllable systems. We demand precise understanding of resource utilization, performance characteristics, and failure modes for our AI apps. An ideal AI-powered application behaves like any other well-instrumented component of the architecture—transparent, debuggable, and optimizable.

But integrating with AI APIs, particularly from third-party providers, often introduces an unwelcome element of opacity. For example, without clear visibility, token counts can become a cost concern. You might observe latency spikes without obvious causes. API-specific errors emerge, leaving you to decipher cryptic messages. This black-box behavior contradicts sound engineering principles, creates friction, impedes product growth, and increases operational risk. Being forced to guess instead of knowing doesn’t bode well for driving innovation, which adopting artificial intelligence should help us achieve.

With Moesif, you can transform the unpredictable “black box” into a manageable, engineering-grade system. In this article, using practical examples for a GenAI API, we’ll demonstrate how Moesif delivers the observability you need for your AI product. We’ll also cover the basics of AI traffic debugging, discussing the key concepts behind the process.

Table of Contents

- Understanding AI Traffic

- Challenges in Debugging AI Traffic

- Key Metrics To Track

- How to use Moesif to Debug AI Traffic

- Conclusion

- Next Steps

Understanding AI Traffic

Put simply, AI traffic refers to the interactions between your application and an AI model’s API, along with the data they exchange. Like web traffic, AI traffic consists of HTTP requests and responses, but these represent interactions with an AI model:

- You send HTTP requests to an AI API that define the prompts, parameters (for example, the maximum number of tokens), and contextual data.

- The API returns the generated responses—text, images, and so on.

You can consider each API call and its payload as a unit of AI traffic.

An HTTP request may look like this:

```json
{
  "prompt": "Explain quantum computing in simple terms",
  "max_tokens": 100,
  "context_size": 50,
  "model": "gpt-3.5-turbo"
}
```

And the response might be similar to the following:

```json
{
  "generated_text": {
    "object": "chat.completion",
    "usage": {
      "completion_tokens": 9,
      "total_tokens": 47,
      "prompt_tokens": 38
    },
    "choices": [
      {
        "index": 0,
        "message": {
          "role": "assistant",
          "content": "Quantum computing is like having a super-fast computer that can think about many things at once. Instead of binary bits, it employs 'qubits' that can hold both 0 and 1 at the same time. This allows it to solve complex problems much faster than regular computers."
        },
        "finish_reason": "length",
        "logprobs": null
      }
    ],
    "id": "chatcmpl-xdK15hflCCsjhXJ79toh8zPKSUR",
    "created": 1740480690
  }
}
```

In a traditional production application, an API typically responds to incoming requests by running deterministic logic, such as database queries, against a well-defined contract, so its behavior is predictable. AI traffic, on the other hand, involves interactions with a probabilistic system: an AI model. Its responses aren’t always deterministic, so you need a different approach to effectively analyze and debug AI products.

Key Concepts and Terminology

Let’s briefly go over the key concepts that relate to debugging AI traffic and how they matter.

Tokens

Tokens act as the fundamental units that AI models use to process text. Instead of processing entire words or sentences, models break down the textual input into tokens. Depending on the model and its tokenization process, tokens can be words, parts of words, or even punctuation marks. For example, an AI model might break down the sentence “Hello, Moesif!” into these four tokens:

- Hello

- ,

- Moesif

- !
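To make the idea concrete, here is a deliberately naive sketch that splits text into runs of word characters and single punctuation marks with a regular expression. This is not how production models tokenize. Most use subword schemes such as byte-pair encoding, so a word like "Moesif" may become several tokens. The sketch only illustrates why token counts differ from word counts.

```python
import re

# Naive illustration only: real models use subword tokenizers (e.g. BPE),
# so their token counts for the same text will usually differ.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def naive_tokenize(text: str) -> list[str]:
    """Split text into runs of word characters and single punctuation marks."""
    return TOKEN_PATTERN.findall(text)


tokens = naive_tokenize("Hello, Moesif!")
print(tokens)
print(len(tokens))
```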

Token usage directly drives the cost of most text-based AI APIs, because many providers price their services by the number of tokens processed, both input and output.

When debugging AI traffic, monitoring token counts can help you identify inefficient prompts, optimize your application’s resource consumption, and manage expenses without surprises. For example, high token usage may indicate overly verbose prompts or incorrect model settings.

Prompt

Prompts are the inputs you provide to an AI model. By carefully designing and refining prompts, you can get reliable, relevant, high-quality outputs while keeping token usage to a minimum.

Understanding how to write effective prompts can also help you create guidelines that end users can follow to get the most out of your app’s services. Correlating prompt details with token usage and other data can also reveal how customers use your application and how models perform alongside the rest of your infrastructure.

Model Parameters

These parameters control the behavior of the AI model during response generation. For example:

- temperature: Controls the randomness of the output. Higher values produce more creative but potentially less coherent results. Lower values make the output more deterministic and focused.

- max_tokens: Sets a hard limit on the number of tokens the model can generate in its response. Setting a maximum limit can prevent runaway generation and control costs.

Incorrect parameter settings can lead to unexpected outputs, excessive token consumption, or truncated responses. By monitoring these parameters alongside the model’s output, you can fine-tune the model’s behavior for specific use cases.
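As one example of monitoring parameters alongside output, the sketch below flags two common problems in a single call: a request with no max_tokens cap, and choices cut off by the cap. It assumes an OpenAI-style completion object, such as the value under generated_text in the example response earlier, where finish_reason is "length" when generation stopped at max_tokens. The function name and warning texts are illustrative.

```python
from typing import Any


def audit_completion(request: dict[str, Any], completion: dict[str, Any]) -> list[str]:
    """Return warnings about parameter-related problems in one AI API call."""
    warnings = []
    if "max_tokens" not in request:
        # Without an explicit cap, output length depends on the provider default.
        warnings.append("no max_tokens set; relying on the provider default")
    for choice in completion.get("choices", []):
        # OpenAI-style APIs report finish_reason == "length" when generation
        # stopped at the max_tokens limit, i.e. the answer is truncated.
        if choice.get("finish_reason") == "length":
            warnings.append(
                f"choice {choice['index']} truncated at max_tokens={request.get('max_tokens')}"
            )
    return warnings


request = {"prompt": "Explain quantum computing in simple terms", "max_tokens": 100}
completion = {"choices": [{"index": 0, "finish_reason": "length"}]}
print(audit_completion(request, completion))
```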

Challenges in Debugging AI Traffic

To effectively debug AI traffic, you can’t simply reuse the perspective and strategies of traditional web applications, which benefit from well-established tools and techniques. We have decades of experience with predictable request-response cycles, deterministic behavior, and code you can readily inspect.

In contrast, integrating AI services introduces a fundamentally different set of challenges. These stem from the probabilistic nature of AI models, the “black box” effect described earlier, and the unique cost and performance considerations of AI APIs.

Traditional web requests are largely deterministic: the same input to the same endpoint, under the same conditions, generally produces the same output. This predictability simplifies debugging. AI model responses, however, are probabilistic. Even with the same prompt, you might get different outputs each time, especially at higher temperature settings. This inherent randomness makes it harder to reproduce issues and pinpoint their root cause.

You have full access to your application’s source code. You can, for example, use debuggers to step through the code, inspect variables, and understand the exact execution flow. The other part of the picture, however, involves interacting with AI models through an API. You can’t see the internal workings of the model, even if you’ve trained a custom model yourself for your use case. This opacity makes it difficult to understand why a model produces a particular output or why an error occurred. To counter it, you must capture as much detail as possible about these interactions and have analytics tools capable of analyzing them.

Then consider pricing. Web API costs often relate to the number of requests or the bandwidth you use, which you can track and predict in a relatively straightforward manner. In contrast, many AI APIs charge based on tokens. Inefficient prompts or model settings can incur unexpectedly high token usage and costs, so you must accurately track and meter token usage (prompt, completion, total, and so on).
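Token-based pricing means you can estimate the cost of each call from its usage counts. The sketch below prices input and output tokens separately, as many providers do. The per-1,000-token prices are hypothetical placeholders, not any provider’s actual rates. The usage numbers come from the example response earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-1,000-token prices in USD (the values used below are hypothetical)."""
    input_per_1k: float
    output_per_1k: float


def estimate_cost(usage: dict[str, int], pricing: Pricing) -> float:
    """Estimate one call's cost from its usage block, pricing input and output separately."""
    input_cost = usage["prompt_tokens"] / 1000 * pricing.input_per_1k
    output_cost = usage["completion_tokens"] / 1000 * pricing.output_per_1k
    return input_cost + output_cost


# Hypothetical prices; check your provider's current price list.
pricing = Pricing(input_per_1k=0.0005, output_per_1k=0.0015)
usage = {"prompt_tokens": 38, "completion_tokens": 9, "total_tokens": 47}
print(f"${estimate_cost(usage, pricing):.7f}")
```

Summing these estimates per customer or per feature shows where token spend concentrates.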

When analyzing performance and latency issues in AI apps, keep in mind that they can originate from multiple sources: your network connection, the AI provider’s infrastructure, or the model itself. To isolate the bottleneck, you must carefully analyze timing data alongside trace data from profiling and tracing tools.

Lastly, consider errors. In traditional web traffic, error codes and messages are well defined and documented. AI APIs, however, can return a variety of errors, including ones specific to the model or provider. You must consolidate and contextualize these errors to understand them well enough to debug.

Key Metrics To Track

Now let’s discuss the key metrics you need to track to effectively debug AI traffic:

Token Usage

As mentioned earlier, token usage directly impacts cost, so monitoring it is essential.

AI APIs usually report the number of tokens used by each interaction in the response. For instance, in the example response shown earlier, the usage field contains detailed token counts:

- prompt_tokens represents the number of tokens in the input.

- completion_tokens represents the number of tokens in the generated response.

- total_tokens gives the sum of prompt_tokens and completion_tokens.
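Here is a minimal sketch of reading these fields in Python. It assumes the completion object has the shape of the example response earlier (the value under generated_text). The consistency check assumes that total_tokens is exactly the sum of the other two fields, as it is in that example. If your provider reports usage differently, adjust or drop the check.

```python
from typing import Any, NamedTuple


class TokenUsage(NamedTuple):
    prompt: int
    completion: int
    total: int


def extract_usage(completion: dict[str, Any]) -> TokenUsage:
    """Read the usage block of a chat completion and sanity-check the total."""
    usage = completion["usage"]
    parsed = TokenUsage(usage["prompt_tokens"], usage["completion_tokens"], usage["total_tokens"])
    # A mismatch here usually points to a logging or parsing problem upstream.
    if parsed.prompt + parsed.completion != parsed.total:
        raise ValueError(f"inconsistent usage block: {usage}")
    return parsed


example = {"usage": {"completion_tokens": 9, "total_tokens": 47, "prompt_tokens": 38}}
print(extract_usage(example))
```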

Latency or Response Time

This measures the total time it takes for an AI API request to complete, from sending the request to receiving the full response. If possible, break it down into the following components:

- Network latency: time spent in transit over the network.

- AI processing time: time spent within the AI provider’s infrastructure.

One way to achieve this is to instrument your app with a framework like OpenTelemetry. With Moesif’s OpenTelemetry integration, the trace data is then available alongside the rest of your analytics data in Moesif.
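Even without a tracing framework, you can approximate this split when the provider reports its own processing time. The sketch below times a call on the client and subtracts a server-reported processing duration. It assumes an OpenAI-style openai-processing-ms response header; other providers may use a different header or none at all, so check your provider’s documentation. fake_send is a stand-in so the example runs without network access.

```python
import math
import time
from typing import Callable, Mapping

# Assumed header name: OpenAI-style APIs report server-side processing time in
# "openai-processing-ms"; other providers may use another header or none.
PROCESSING_HEADER = "openai-processing-ms"

Response = tuple[int, Mapping[str, str], bytes]  # (status, headers, body)


def timed_call(send: Callable[[], Response]) -> dict[str, float]:
    """Time one AI API request and split it into provider processing and the rest."""
    start = time.perf_counter()
    _status, headers, _body = send()
    total_ms = (time.perf_counter() - start) * 1000
    # NaN means the provider did not report processing time. The lookup is
    # case-sensitive, so normalize header names first if your client does not.
    processing_ms = float(headers.get(PROCESSING_HEADER, math.nan))
    return {
        "total_ms": total_ms,
        "processing_ms": processing_ms,
        # Everything not reported as processing is attributed to network
        # transit and client overhead, which is an approximation.
        "network_ms": total_ms - processing_ms,
    }


def fake_send() -> Response:
    """Stand-in for a real HTTP call: about 50 ms round trip, 30 ms reported processing."""
    time.sleep(0.05)
    return 200, {PROCESSING_HEADER: "30"}, b"{}"


print(timed_call(fake_send))
```

OpenTelemetry spans give you the same breakdown with more structure, and Moesif can display those traces next to your other analytics.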

Error Rates and Breakdowns

You must also track how often errors occur, what types they are, and how they are distributed across endpoints. High error rates can point to a number of issues:

- Problems with the requests themselves, for example, invalid input or exceeded rate limits

- Authentication issues

- Problems with the AI service itself

Analyzing errors from different perspectives helps pinpoint the root cause.
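A simple first step is to map HTTP status codes to the categories above, so you can count errors by likely cause. The mapping below follows common HTTP conventions and is an assumption. Providers may also return provider-specific codes or put the detail in the error body, so refine it using your provider’s error documentation.

```python
from collections import Counter


def classify_error(status: int) -> str:
    """Map an AI API's HTTP status code to a coarse debugging category."""
    if status < 400:
        return "no_error"
    if status == 429:
        return "rate_limited"      # too many requests or tokens in a time window
    if status in (401, 403):
        return "authentication"    # missing, invalid, or unauthorized API key
    if status < 500:
        return "invalid_request"   # malformed input or unsupported parameters
    return "provider_error"        # failures inside the AI service itself


# Illustrative status codes, not real traffic.
statuses = [200, 429, 401, 400, 503, 200, 429]
print(Counter(classify_error(s) for s in statuses))
```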

Request and Response Details

This refers to the complete content of the data you send to (HTTP request) and receive from (HTTP response) the AI model:

- The request method

- The HTTP headers

- The payload or body

Inspecting and analyzing these details can give you significant insights for debugging. For example, you can verify that the request body is correctly formatted and understand the generated output. Payloads and headers often carry important data like the following:

- AI model information

- Token usage

- Rate limit information

Model Identifier

This identifies the specific AI model that processes the request, for example, gpt-3.5-turbo-0613, gpt-4-0314, and claude-2.

Different models have different performance characteristics, cost structures, and potential failure modes. Therefore, having the model identifier allows you to compare performance across models, identify model-specific issues, and make sure you’re using the intended model.
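With the model identifier on every event, comparing models becomes a group-by. The sketch below computes a per-model 90th-percentile latency using the nearest-rank method. The latencies are made-up numbers for illustration only. With just a handful of samples, P90 is simply the slowest call, so compare models over enough traffic to be meaningful.

```python
from collections import defaultdict


def p90_by_model(events: list[tuple[str, float]]) -> dict[str, float]:
    """Return the 90th-percentile latency per model (nearest-rank method)."""
    by_model: dict[str, list[float]] = defaultdict(list)
    for model, latency_ms in events:
        by_model[model].append(latency_ms)
    result = {}
    for model, latencies in by_model.items():
        latencies.sort()
        # Nearest rank = ceil(0.9 * n), computed with integers to avoid float error.
        rank = (9 * len(latencies) + 9) // 10
        result[model] = latencies[rank - 1]
    return result


# Made-up latencies in milliseconds, for illustration only.
events = [("gpt-4-0314", ms) for ms in (820, 900, 950, 1010, 1200)] + [
    ("gpt-3.5-turbo-0613", ms) for ms in (300, 320, 350, 400, 2100)
]
print(p90_by_model(events))
```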

User and Session Identifiers

If your application serves multiple users or has distinct sessions, you might want to attach identifiers such as a user ID, session ID, or company ID to each AI API request. This allows you to associate API calls with specific customers.

Custom Metadata

Custom metadata allows you to attach any additional application-specific information to your AI API requests like the following:

- Feature flags

- Experiment IDs

- Task types

- API version

Custom metadata provides valuable context for analyzing your AI traffic. For example, you can track which feature flags were enabled for a particular request, or which A/B testing group a user belongs to.
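One way to keep identifiers and metadata consistent is to build them in one place for every AI call. The sketch below is illustrative. The field names and values are assumptions, not a Moesif schema. Map them to whatever your analytics SDK expects, such as the user and company identification and the free-form metadata that Moesif supports.

```python
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class AICallContext:
    """Per-request context attached to each AI API call (field names are illustrative)."""
    user_id: str
    company_id: str
    session_id: str
    metadata: dict[str, Any] = field(default_factory=dict)


ctx = AICallContext(
    user_id="user-123",          # hypothetical identifiers
    company_id="acme-corp",
    session_id="sess-9f2",
    metadata={
        "feature_flags": ["long_context_beta"],
        "experiment_id": "prompt-ab-test-7",
        "experiment_group": "B",
        "task_type": "summarization",
        "api_version": "v2",
    },
)

# Hypothetical event shape: the request details plus the context fields.
event = {"request": {"model": "gpt-3.5-turbo"}, **asdict(ctx)}
print(event["metadata"]["experiment_group"])
```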

Usage and Billing

Lastly, we recommend you track usage and the associated revenue or cost. This allows you to confidently allocate resources and grow your product.

Considerations For Image, Video, and Audio Generation

When debugging AI products that generate images and videos, you need to look at a slightly different set of metrics. Instead of token usage, focus on the content itself: quality, resolution, duration, and so on.

For images and videos, these metrics characterize the generated output:

- Dimensions (width and height)

- Resolution (pixels per inch or dots per inch)

- Duration (videos only)

For audio, you might also consider the sample rate or bitrate, along with duration.
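If the audio arrives as uncompressed WAV, Python’s standard-library wave module can read these properties. The sketch assumes the provider returns PCM WAV bytes. Compressed formats such as MP3 need a different decoder. The example builds a short silent clip in memory only so that it runs on its own.

```python
import io
import wave


def wav_metrics(data: bytes) -> dict[str, float]:
    """Read duration, sample rate, and bitrate from an uncompressed (PCM) WAV payload."""
    with wave.open(io.BytesIO(data), "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        channels = wav.getnchannels()
        sample_width = wav.getsampwidth()  # bytes per sample
    return {
        "duration_s": frames / rate,
        "sample_rate_hz": rate,
        # PCM bitrate = samples per second * bits per sample * channels
        "bitrate_bps": rate * sample_width * 8 * channels,
    }


# Build a 1.5-second silent mono 16-bit clip at 16 kHz as stand-in output.
buf = io.BytesIO()
with wave.open(buf, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(16000)
    out.writeframes(b"\x00\x00" * 24000)

print(wav_metrics(buf.getvalue()))
```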

How to use Moesif to Debug AI Traffic

Moesif provides a robust set of tools to track, collect, and observe AI traffic metrics so you can debug with ease and accuracy. The following sections demonstrate example scenarios of debugging AI traffic for a GenAI-based product. The product uses a GenAI API to generate text, images, and videos. It also supports training AI models and generating embeddings.

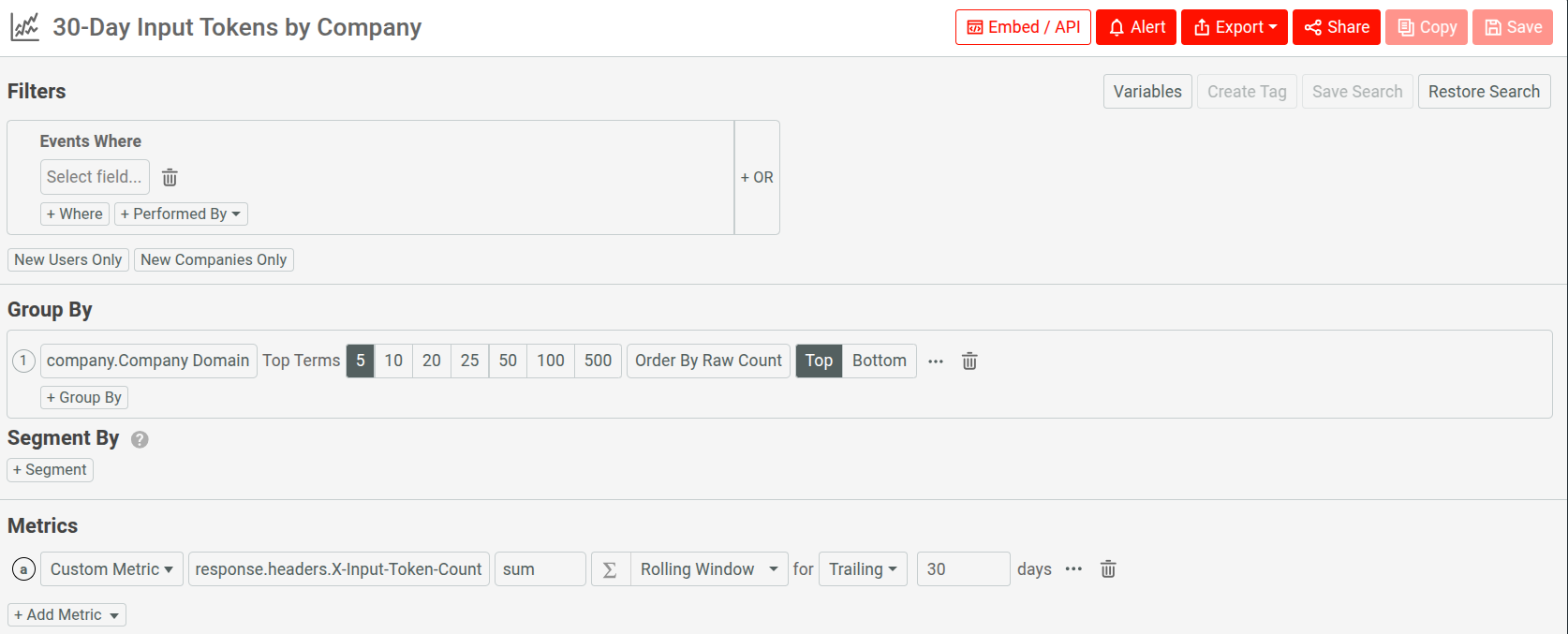

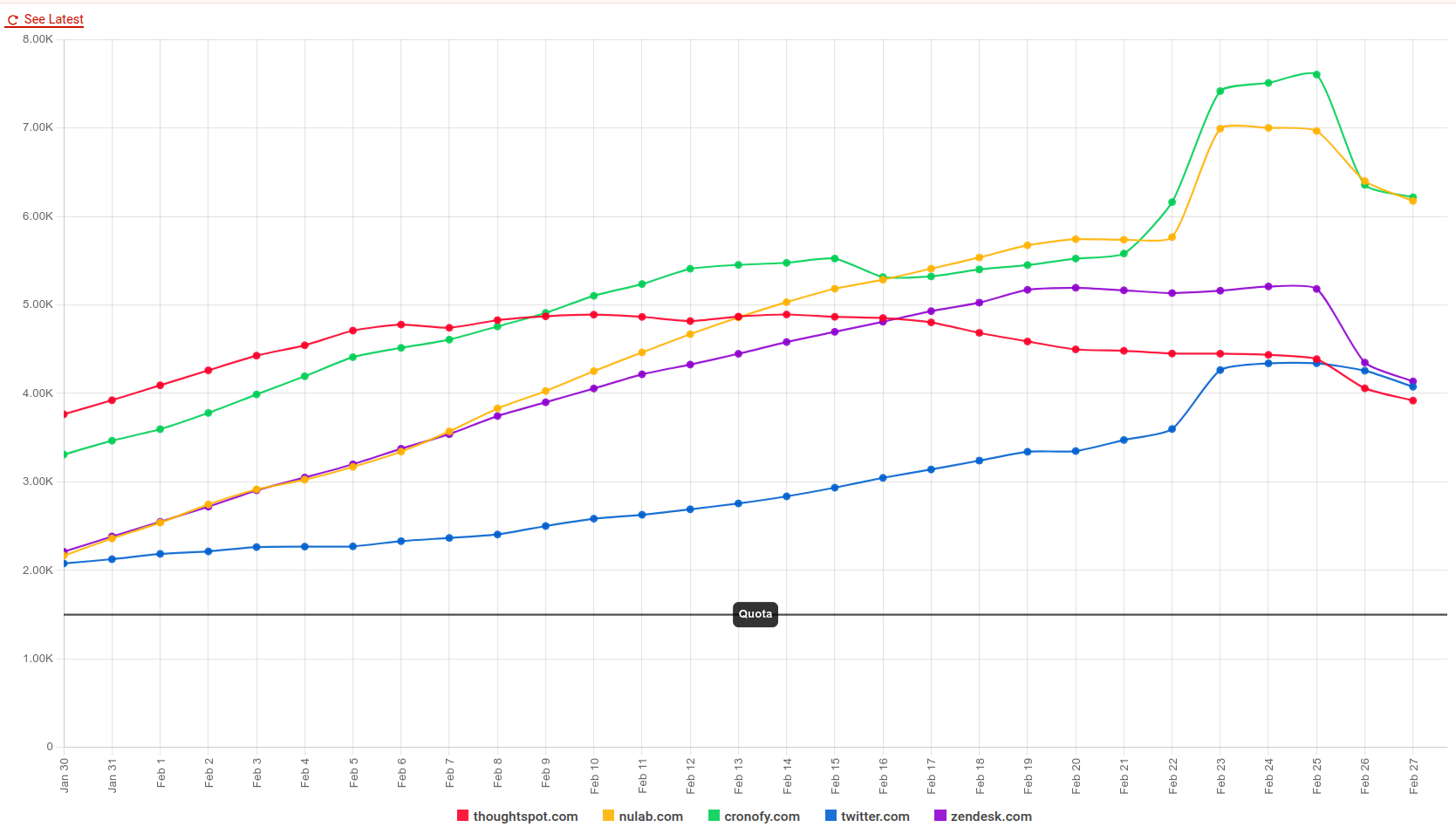

Understand Token Usage

You can use Moesif to analyze token usage in many ways. For example, here we look at input token consumption by company over the past 30 days against a maximum quota of 1.5k tokens:

You can perform a similar analysis with output tokens, for example, to evaluate performance.
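Moesif computes this for you. The sketch below only spells out the aggregation the example describes: sum input tokens per company over a trailing 30-day window and compare the totals with the 1.5k quota. The company names and token counts are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

QUOTA_INPUT_TOKENS = 1_500  # the 1.5k quota used in the example


def companies_over_quota(
    events: list[tuple[str, datetime, int]],
    now: datetime,
    window_days: int = 30,
    quota: int = QUOTA_INPUT_TOKENS,
) -> dict[str, int]:
    """Sum input tokens per company over a trailing window; return those above quota."""
    cutoff = now - timedelta(days=window_days)
    totals: dict[str, int] = defaultdict(int)
    for company_id, timestamp, prompt_tokens in events:
        if cutoff <= timestamp <= now:
            totals[company_id] += prompt_tokens
    return {company: used for company, used in totals.items() if used > quota}


now = datetime(2025, 2, 25, tzinfo=timezone.utc)
events = [
    ("acme-corp", now - timedelta(days=1), 1_000),
    ("acme-corp", now - timedelta(days=12), 600),
    ("globex", now - timedelta(days=3), 400),
    ("acme-corp", now - timedelta(days=45), 5_000),  # outside the 30-day window
]
print(companies_over_quota(events, now))
```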

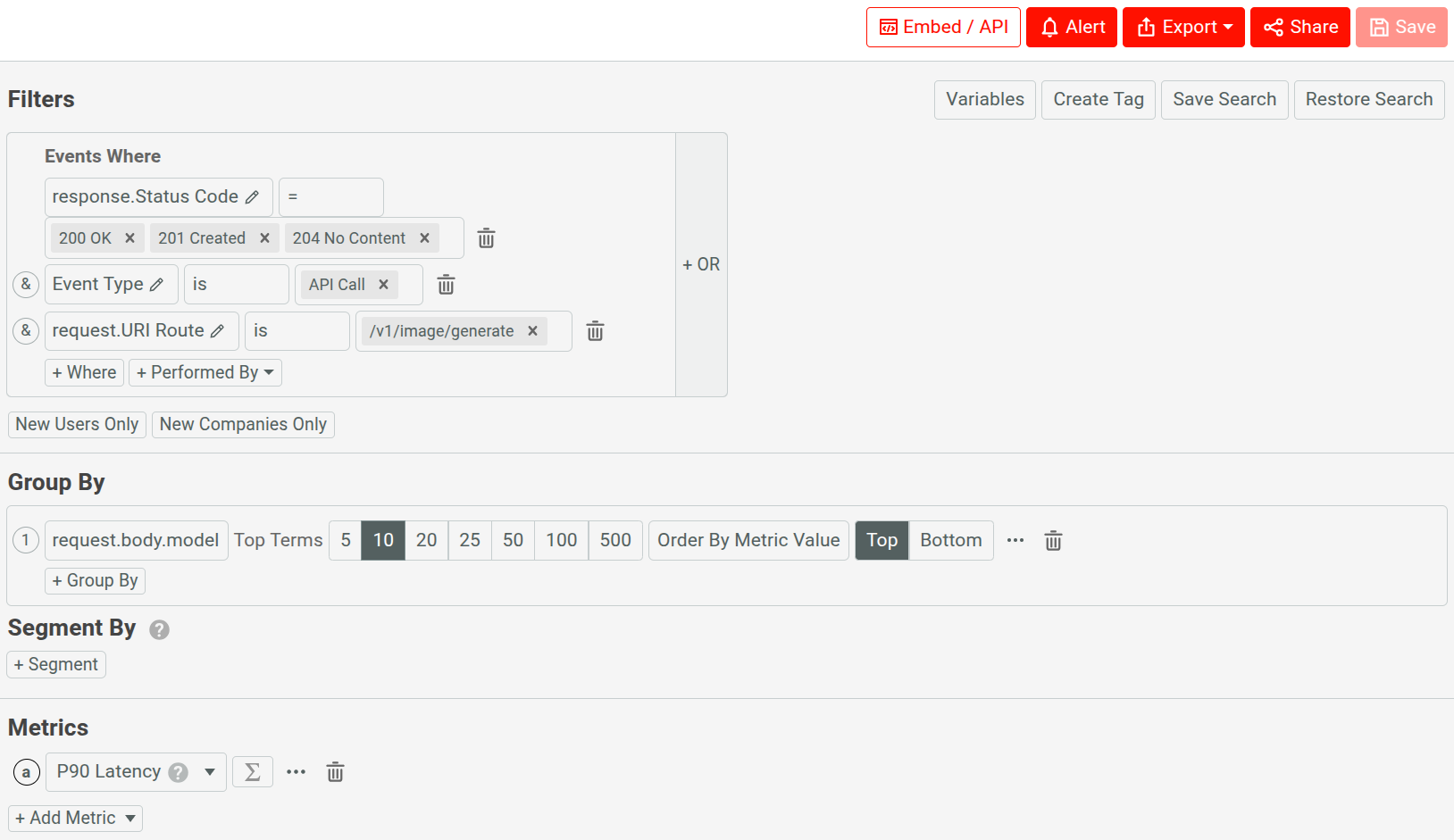

Analyze Performance

Many AI APIs require you to specify the AI model in your request. It can also reside in request or response headers, or in custom metadata of your application. Since Moesif captures request and response details, and allows you to add custom metadata, you have AI model data available to you through different means. This allows you to perform comparative analysis of AI models for different metrics.

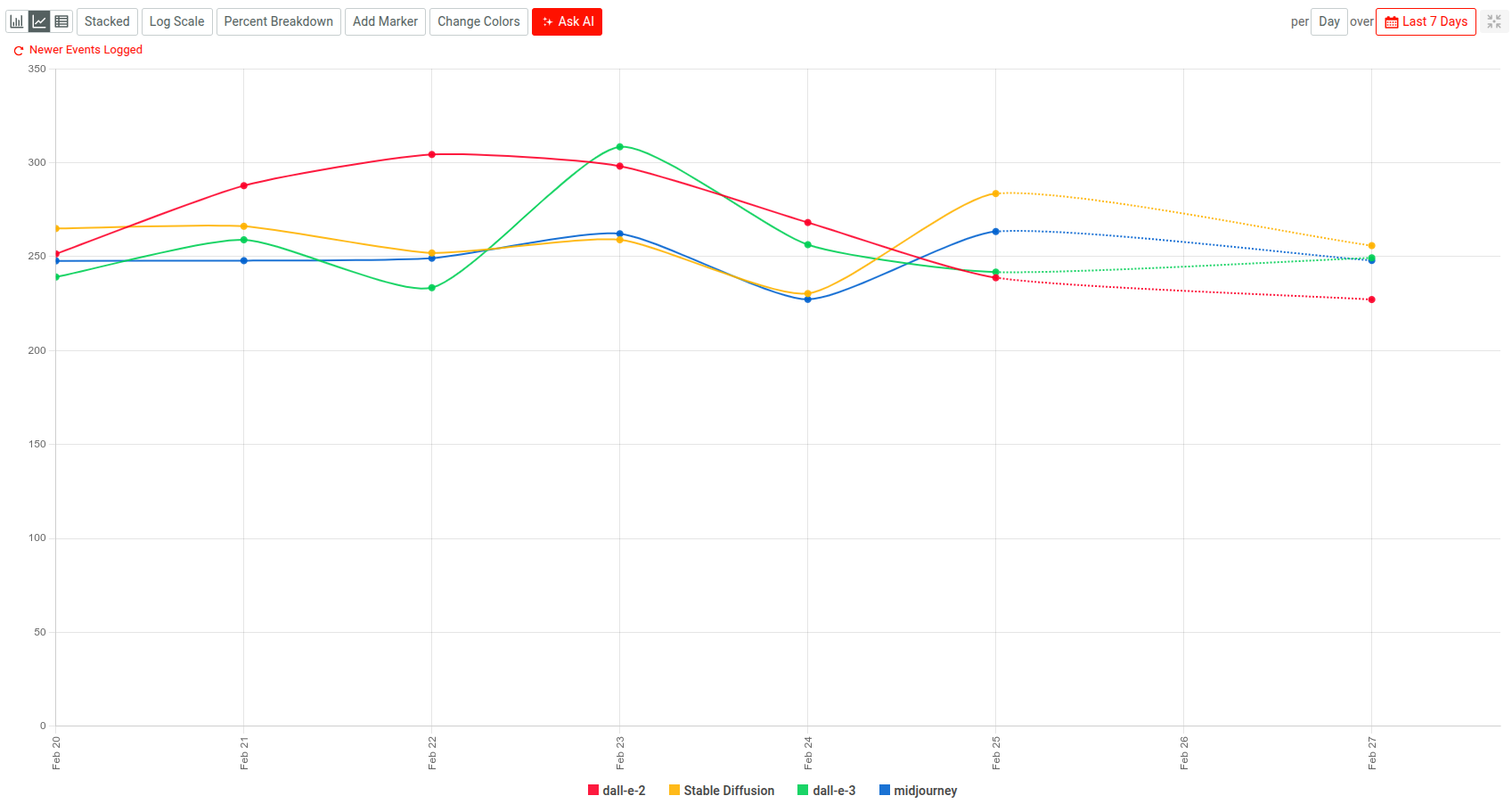

For example, you can use Moesif Time Series analysis to compare performance of AI models. The following chart illustrates a P90 latency analysis for image generation across different models:

As you can observe, the DALL-E 2 model performs better and more consistently than the other models for image generation.

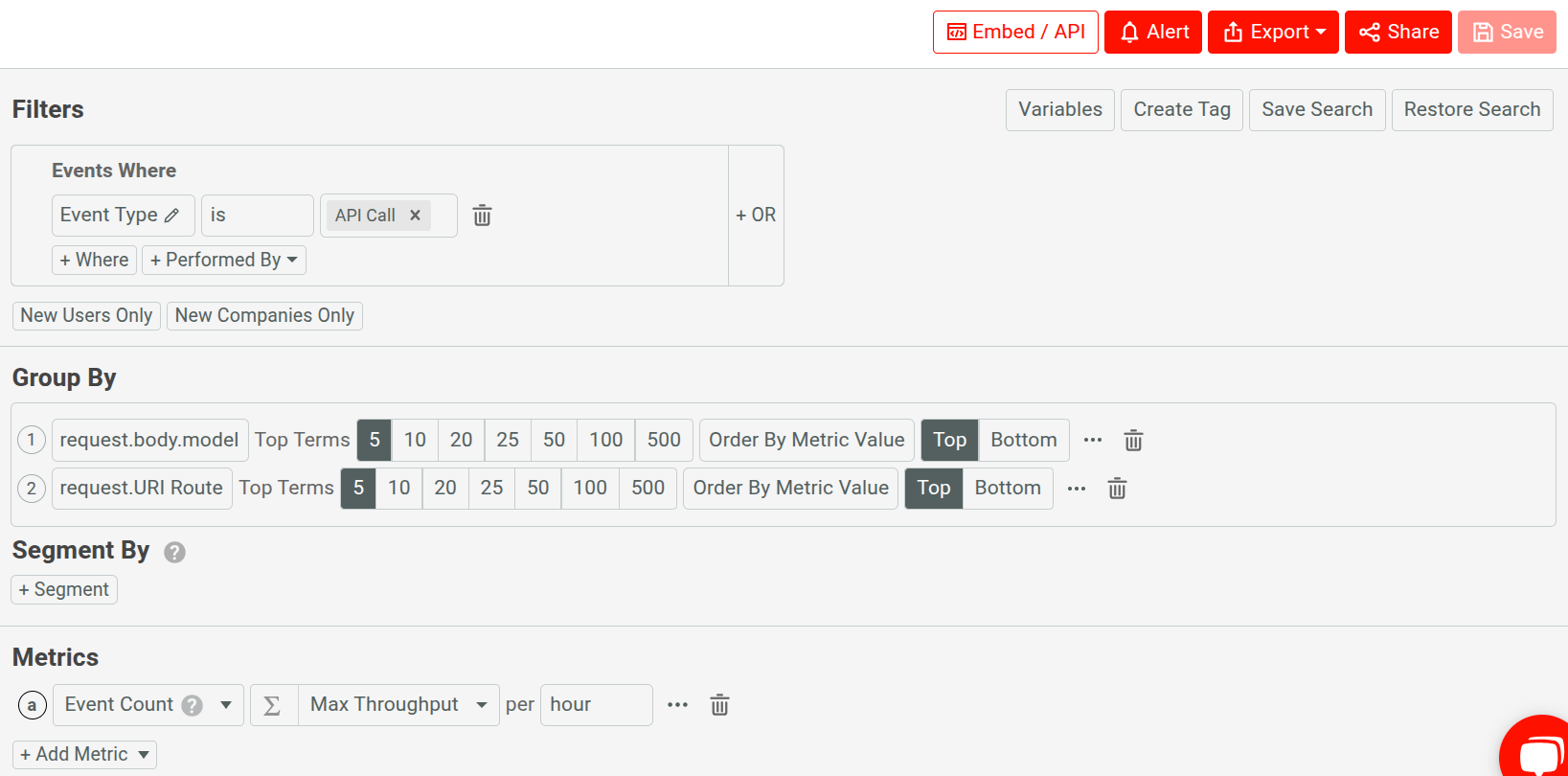

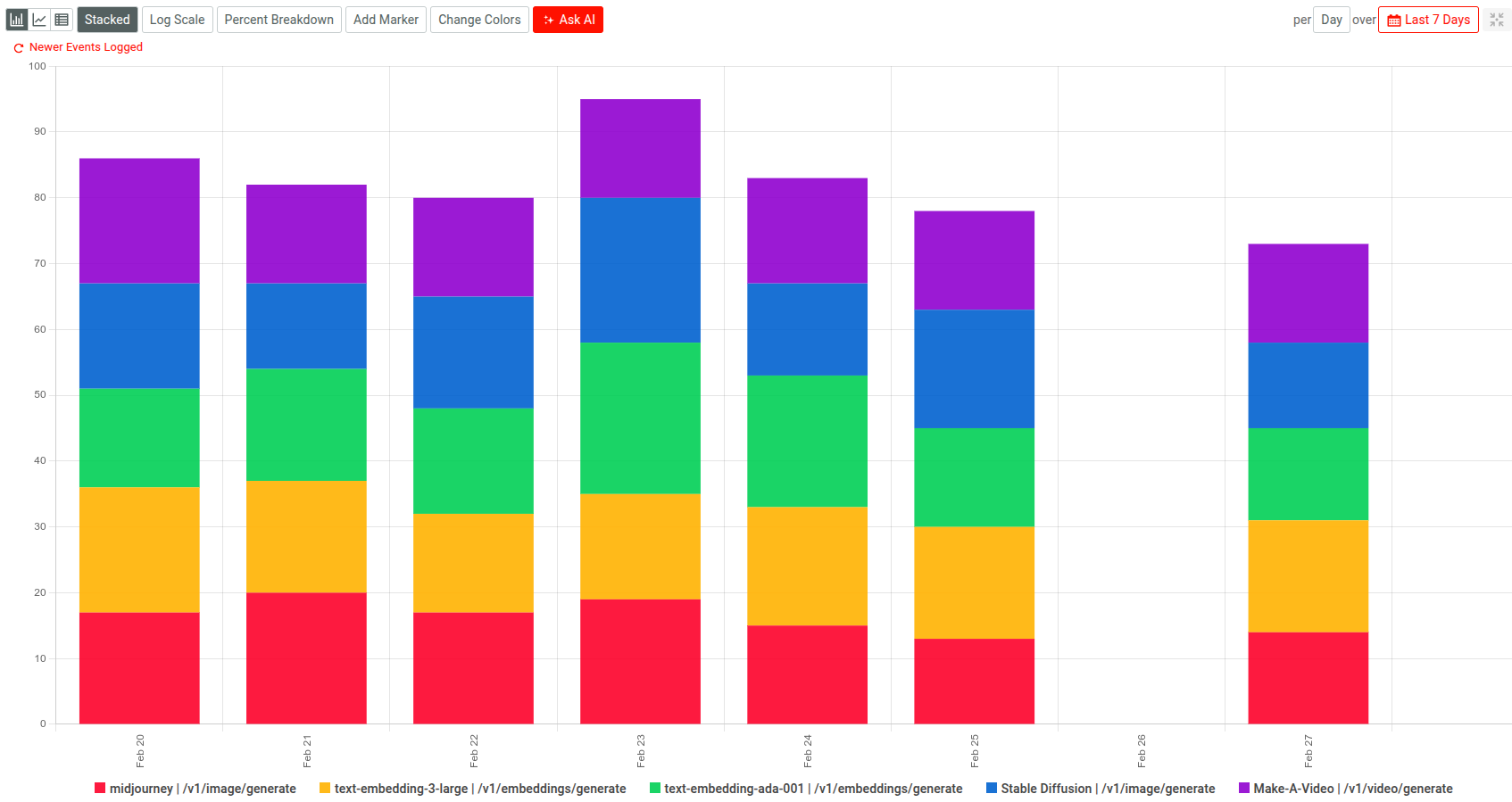

Moesif offers flexibility, customizability, and a collection of built-in functions for defining custom performance metrics. For example, here we analyze the throughput of API calls over the past 7 days across different AI models and API endpoints, visualized in a stacked bar chart:
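The data behind such a chart is a count of calls per day, broken down by model and endpoint. The sketch below shows that grouping. The endpoint paths and timestamps are illustrative, not taken from the product described here.

```python
from collections import Counter
from datetime import date, datetime


def daily_throughput(calls: list[tuple[datetime, str, str]]) -> Counter:
    """Count calls per (day, model, endpoint): one bar per day, one segment per pair."""
    return Counter((ts.date(), model, endpoint) for ts, model, endpoint in calls)


# Illustrative endpoints and timestamps.
calls = [
    (datetime(2025, 2, 24, 9, 15), "dall-e-2", "/v1/images/generations"),
    (datetime(2025, 2, 24, 17, 40), "dall-e-2", "/v1/images/generations"),
    (datetime(2025, 2, 24, 11, 5), "gpt-4-0314", "/v1/chat/completions"),
    (datetime(2025, 2, 25, 8, 30), "gpt-4-0314", "/v1/chat/completions"),
]
for (day, model, endpoint), count in sorted(daily_throughput(calls).items()):
    print(day, model, endpoint, count)
```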

Analyze Errors

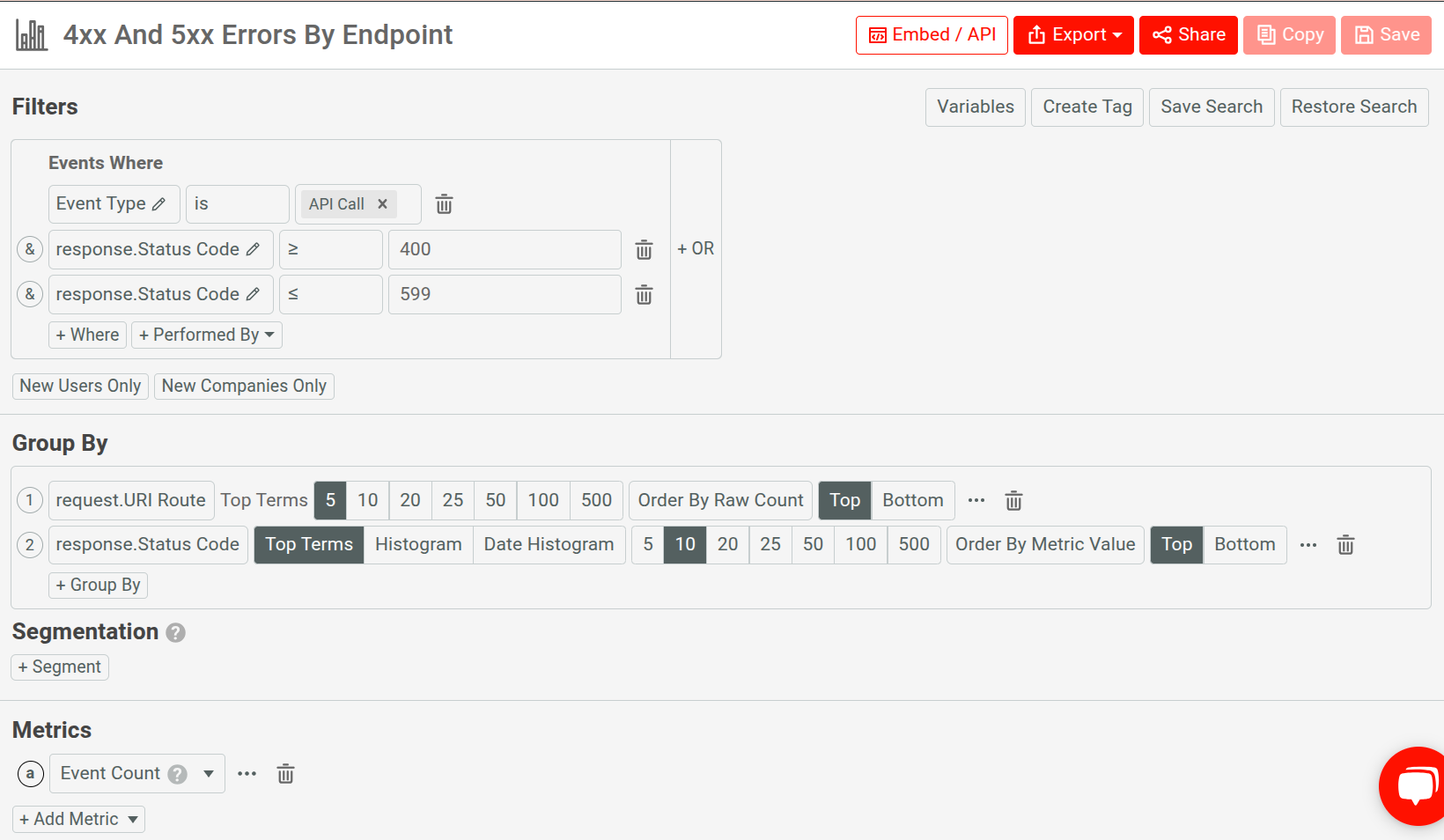

Moesif’s segmentation and group features can break down different metrics across entities. For example, you can plot a bar chart for all 4xx client errors and 5xx server errors across endpoints.

Being able to visualize errors like this allows you to debug more efficiently, identify volatile resources, and address pain points of your customers.
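Behind a chart like this is a simple aggregation: count client (4xx) and server (5xx) errors per endpoint. The sketch below illustrates it with made-up responses. Endpoints that only returned successful responses do not appear in the result.

```python
from collections import defaultdict


def errors_by_endpoint(responses: list[tuple[str, int]]) -> dict[str, dict[str, int]]:
    """Count 4xx and 5xx responses per endpoint; successful calls are ignored."""
    counts: dict[str, dict[str, int]] = defaultdict(lambda: {"4xx": 0, "5xx": 0})
    for endpoint, status in responses:
        if 400 <= status < 500:
            counts[endpoint]["4xx"] += 1
        elif 500 <= status < 600:
            counts[endpoint]["5xx"] += 1
    return dict(counts)


# Made-up responses, for illustration only.
responses = [
    ("/v1/chat/completions", 200),
    ("/v1/chat/completions", 429),
    ("/v1/images/generations", 500),
    ("/v1/images/generations", 400),
    ("/v1/embeddings", 200),
]
print(errors_by_endpoint(responses))
```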

Enhance AI Observability With OpenTelemetry

As we’ve discussed, the opacity of AI apps limits the visibility you need for effective debugging. One strategy we recommend to alleviate this is instrumenting your apps with observability frameworks like OpenTelemetry. If you’ve already done that, your trace data becomes available in the Moesif platform once you integrate Moesif. This vastly improves your observability, troubleshooting, and analysis, because a unified platform gives you greater context across all your analytics data, tools, and traces.

See the following resources to learn how OpenTelemetry and Moesif can give you better observability for your AI apps:

- Achieve API Traceability with Moesif and OpenTelemetry

- Integrate OpenTelemetry with Moesif in your Node.js app

Track Usage And Cost

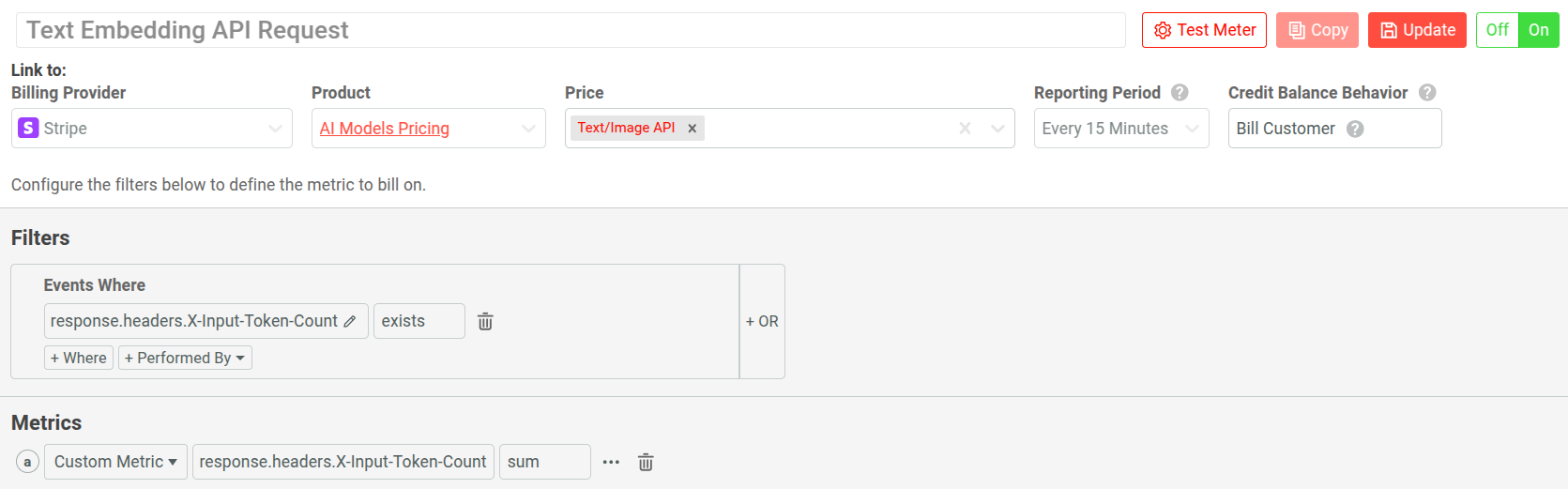

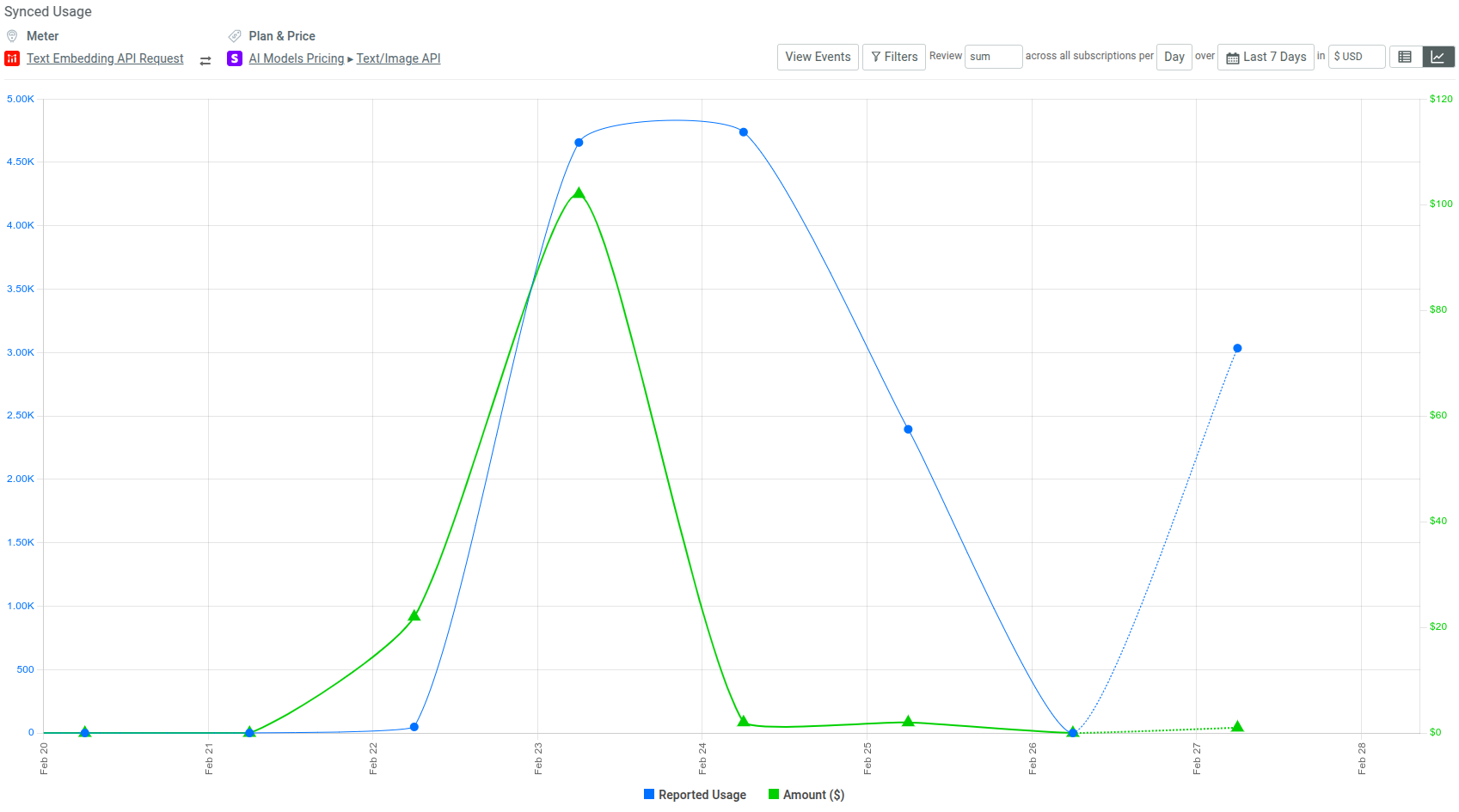

When it comes to cost and revenue, token usage tells only half the story. With Moesif’s Billing Meters, you can precisely define how usage translates into charges.

Moesif supports popular billing providers like Stripe out of the box. You can also use a custom billing solution, with Moesif accurately tracking, metering, and reporting usage. You can then use Billing Report Metrics to get detailed reports of usage-based cost or revenue.

For example, here we define a billing meter, charging customers based on their input prompts:

Moesif then tracks the usage and associated cost or revenue for this billing meter in real time.
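Billing Meters handle this for you. The sketch below only illustrates the arithmetic a meter that charges on input tokens performs: sum input tokens per customer, apply a unit price, and round to cents. The price and customers are hypothetical. Decimal is used instead of float to avoid rounding errors in monetary amounts.

```python
from collections import defaultdict
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical rate for illustration: $0.002 per 1,000 input tokens.
PRICE_PER_1K_INPUT_TOKENS = Decimal("0.002")
CENT = Decimal("0.01")


def meter_input_tokens(events: list[tuple[str, int]]) -> dict[str, Decimal]:
    """Sum input tokens per customer and convert them to a charge rounded to cents."""
    tokens: dict[str, int] = defaultdict(int)
    for customer_id, prompt_tokens in events:
        tokens[customer_id] += prompt_tokens
    charges = {}
    for customer, total in tokens.items():
        amount = Decimal(total) / 1000 * PRICE_PER_1K_INPUT_TOKENS
        charges[customer] = amount.quantize(CENT, rounding=ROUND_HALF_UP)
    return charges


events = [("acme-corp", 600_000), ("acme-corp", 400_000), ("globex", 250_000)]
print(meter_input_tokens(events))
```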

Conclusion

Unfortunately, simply knowing which metrics to track for debugging AI traffic doesn’t suffice. You need a powerful, unified platform to collect, analyze, and visualize this data in a way that’s actionable.

With Moesif, you can quickly achieve comprehensive observability for your AI apps with minimal setup. Moesif aims to provide a customer- and product-centric analytics platform for modern products, solving the unique challenges that come with them. Moesif offers much more than we’ve been able to demonstrate within the scope of this article.

If you want to see for yourself how Moesif can transform the opaque world of AI interactions into a transparent and manageable system, sign up today for a free trial, no credit card required.

Next Steps

- Get started with Moesif

- Integrate Moesif with your platform of choice.

- Use Moesif to understand revenue and cost of API usage.