Best Practices for API Rate Limits and Quotas with Moesif to Avoid Angry Customers

Like users of any online service, your API users expect high availability and good performance. This also means one customer should not be able to starve another customer’s access to your API. Adding rate limiting is a defensive measure that protects your API from being overwhelmed with requests and improves general availability. Similarly, quota management ensures customers stay within their contract terms and obligations, so you’re able to monetize your API. Both are typically enforced by software that monitors API requests as they arrive. This is even more important for Data and GenAI APIs, where the cost of an API call can be high and is part of your COGS (Cost of Goods Sold). Without quota management, a customer could easily use far more resources than their plan allows even if they stay within your overall server rate limits.

Yet incorrect implementations can make customers angry because their requests do not work as expected. Worse, a bad rate limiting implementation could itself fail, causing all requests to be rejected with a 429 error, the status code indicating that too many requests were sent in a given amount of time. This guide walks through the different types of rate limits and quotas. Then it walks through ways to set up rate limiting that protects your API without making customers angry.

Introduction to API Management

API management is a crucial aspect of ensuring the smooth operation and security of Application Programming Interfaces (APIs). It involves a set of processes and tools that enable organizations to create, manage, and analyze APIs in a secure and scalable manner. One key component of API management is rate limiting, which controls the number of requests an API can handle within a certain time period. Implementing API rate limiting is essential to prevent excessive usage, denial of service attacks, and to ensure fair usage of API resources. By setting appropriate rate limits, API providers can protect their APIs from abuse, prevent server overload, and maintain reliable performance.

Understanding API Rate Limits and Quotas

API rate limits and quotas are essential mechanisms that servers use to control the number of requests clients can make within a specific time frame. Rate limits set the maximum number of requests allowed in a short period, such as per second or minute, while quotas define the total number of requests permitted over a longer duration, like per hour, day, or month. Rate limits can also be set for individual users to ensure fair access to resources. A fixed number of requests are allowed in specified time intervals to manage request flow. These controls are vital for preventing abuse, maintaining optimal server performance, and ensuring fair resource allocation among all users.

How Do Rate Limits and Quotas Work

Rate limits and quotas are two related concepts in API management that work together to control API usage. A rate limit defines the maximum number of requests an API will accept within a short time period, such as a second or a minute, while a quota specifies the total number of requests allowed over a longer timeframe, such as a day or a month. When a user exceeds their quota or rate limit, they receive an error message indicating that they have reached their limit. API providers use rate limiting algorithms, such as the token bucket or leaky bucket algorithm, to enforce rate limits and prevent excessive usage. By setting quotas and rate limits, API providers can manage API traffic, prevent abuse, and ensure fair usage of API resources.
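To make the token bucket idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions rather than a production implementation: the class name, the capacity and refill_rate parameters, and the injectable clock are all hypothetical choices made for this example.

```python
import time


class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled at `refill_rate` per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_rate = float(refill_rate)
        self.clock = clock  # injectable so the bucket can be tested without sleeping
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last_refill = clock()

    def allow(self, cost=1.0):
        """Return True and consume `cost` tokens if enough are available."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.last_refill = now
        # Add the tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

The capacity controls how large a burst is tolerated, while the refill rate sets the sustained request rate. In practice you would keep one bucket per client.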

Tracking and enforcing limits

Both quotas and rate limits work by tracking the number of requests each API user makes within a defined time interval and then taking some action when a user exceeds the limit, which helps manage traffic and keep performance consistent. That action could be rejecting the request with a 429 Too Many Requests status code, sending a warning email, or adding a surcharge, among other things. Rate limiting algorithms throttle requests that exceed a set threshold, which keeps the system stable. Just like different metrics are needed to measure different goals, different rate limits are used to achieve different goals.
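The tracking described above can be sketched as a fixed-window counter. This is a simplified, in-memory Python example; the FixedWindowLimiter name and its parameters are hypothetical, and a real deployment would normally keep the counters in a shared store.

```python
import time


class FixedWindowLimiter:
    """Counts requests per client in fixed time windows."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.counts = {}  # client_id -> (window index, requests seen in that window)

    def check(self, client_id):
        """Return the HTTP status to respond with: 200 if allowed, 429 if over the limit."""
        window = int(self.clock() // self.window_seconds)
        stored_window, count = self.counts.get(client_id, (window, 0))
        if stored_window != window:
            count = 0  # a new window has started, so the counter resets
        count += 1
        self.counts[client_id] = (window, count)
        return 200 if count <= self.limit else 429
```

A fixed window is the simplest approach, but it lets a client send up to twice the limit around a window boundary. Sliding windows or token buckets smooth that out.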

Rate limits vs quota management

There are two different types of rate limiting, each with different use cases. Short term rate limits focus on protecting servers and infrastructure from being overwhelmed within a short period of time, whereas long term quotas focus on managing the cost and monetization of your API’s resources. Keep in mind that some individuals employ other techniques, such as client-side JavaScript, to circumvent restrictions on API usage.

Rate limits

Short term rate limits, a type of API rate limit, look at the number of requests per second or per minute within a short period of time and help “even out” spikes and bursty traffic patterns to offer backend protection. Because short term rate limits are calculated in real-time, there is usually little customer-specific context. Instead, these rate limits may be measured using a simple counter per IP address or API key. API rate limits play a crucial role in maintaining the stability and security of servers. By capping the number of requests, servers can mitigate the risk of excessive traffic that could lead to performance degradation or even denial-of-service (DoS) attacks. Preventing unnecessary requests is essential to maintain stability and performance, as it helps in reducing the load on the server and ensures optimal resource utilization. These limits ensure that resources are distributed fairly, preventing any single user from monopolizing the server’s capacity.

Example use cases for rate limits:

- Protect downstream services from being overloaded by traffic spikes

- Increase availability and prevent DDoS attacks from bringing down your API

- Provide a time buffer to handle capacity scaling operations

- Ensure consistent performance for customers and even out load on databases and other dependent services

- Reduce costs due to uneven utilization of downstream compute and storage capacity.

Identifier

Due to their time sensitivity, short term rate limits need a mechanism to identify different clients without relying heavily on external context. Some rate limiting mechanisms will use IP addresses, but this can be inaccurate. For example, some customers may call your API from many different servers. A more robust solution may use the API key or the user_id of the customer.
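As a rough Python sketch of that preference order, the function below picks the API key when one is present and falls back to the IP address otherwise. The X-API-Key header name and the function name are assumptions for illustration; use whatever credential your API actually issues.

```python
def client_identifier(headers, remote_addr):
    """Prefer the API key; fall back to the IP address only when no key was sent."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    api_key = normalized.get("x-api-key")  # assumed header name
    if api_key:
        return "key:" + api_key
    return "ip:" + remote_addr
```

Prefixing the identifier with its type keeps an API key from ever colliding with an IP address in the same counter store.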

Scope

Short term rate limits can be scoped either to a single server or to a distributed cluster of instances using a cache system like Redis. You can also use information within the request, such as the API endpoint, for additional scope. This can be helpful to offer different rate limits for different services depending on their capacity. For example, certain services may be very costly to serve and easily overwhelmed, such as launching batch jobs or running complex queries on a database.

Short term rate limits can be imperfect given their real-time nature, which makes them a poor fit for billing and financial terms but great for backend protection.
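One way to express endpoint-level scope is a small lookup table of limits plus a counter key that combines the client and the endpoint. The Python sketch below is illustrative only: the endpoints, the numbers, and the key format are all hypothetical.

```python
# Hypothetical per-minute limits; costly endpoints get a lower ceiling.
ENDPOINT_LIMITS = {
    "POST /batch-jobs": 5,
    "GET /reports": 30,
}
DEFAULT_LIMIT = 300


def limit_for(method, path):
    """Look up the limit for an endpoint, falling back to the default."""
    return ENDPOINT_LIMITS.get(f"{method} {path}", DEFAULT_LIMIT)


def counter_key(client_id, method, path):
    """Build the key a shared store (for example Redis) would count requests under."""
    return f"ratelimit:{client_id}:{method} {path}"
```

Because every instance derives the same key for the same client and endpoint, a cluster that shares one cache will also share one counter.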

Quota management

Unlike short term rate limits, the goal of quotas is to enforce business terms, such as monetizing your APIs and protecting your business from high cost overruns by customers. Quotas measure customer utilization of your API over longer durations such as per hour, per day, or per month, and each one allows a fixed number of requests in that period so that usage stays within contract terms. Customers who do not manage their call frequency can exceed these limits, which can lead to added costs and operational issues. Quotas are not designed to prevent a spike from overwhelming your API. Rather, quotas regulate your API’s resources by ensuring a customer stays within their agreed contract terms. Because you may have a variety of different API service tiers, quotas are usually dynamic for each customer, which makes them more complex to handle than short term rate limits. Besides quota obligations, historical trends in customer behavior can be used for spam detection and for automatically blocking users who may be violating your API’s terms of service (ToS).

Example use cases for quotas:

- Block intentional abuse such as sending spam messages, scraping, or creating fake reviews

- Reduce unintentional abuse while allowing a customer’s usage to burst if needed

- Properly monetize your API via metering and usage-based billing

- Ensure a customer does not consume too many resources, such as AI tokens or compute, which impact your cloud spend

- Enforce contract terms of service and prevent “freeloaders”

Identifier

Long term quotas are almost always calculated at a per-tenant or per-customer level. IP addresses won’t work for these cases because an IP address can change, or a single customer may call your API from multiple servers and so circumvent the enforcement.

Scope

Because quotas usually enforce the financial and legal terms of a contract, they should be unified across all servers and be accurate. There can’t be any “guesstimation” when it comes to quotas.
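A per-tenant quota check could look roughly like the Python sketch below. It keeps usage in memory purely for illustration; the plan names, allowances, and class name are hypothetical, and a real system would persist usage in a single authoritative store so every server sees the same totals.

```python
from collections import defaultdict

# Hypothetical plans: request allowance per billing period for each tier.
PLAN_QUOTAS = {"free": 1_000, "pro": 100_000}


class QuotaTracker:
    """Tracks usage per tenant and billing period against the tenant's plan."""

    def __init__(self, plans):
        self.plans = plans
        self.usage = defaultdict(int)  # (tenant_id, period) -> units consumed

    def record(self, tenant_id, plan, period, units=1):
        """Add usage for the period and report where the tenant stands."""
        self.usage[(tenant_id, period)] += units
        used = self.usage[(tenant_id, period)]
        quota = self.plans[plan]
        return {
            "used": used,
            "remaining": max(quota - used, 0),
            "exceeded": used > quota,
        }
```

The units argument lets the same tracker meter something other than request counts, such as AI tokens. What happens once "exceeded" is true (reject, warn, or bill an overage) is a business decision left to the caller.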

How to implement rate limiting

Usually a gateway server like NGINX or Amazon API Gateway is the ideal spot to integrate rate limiting as most external requests will be routed through your gateway layer. Rate limiting ensures fair use of API resources by preventing any single user from monopolizing the system. For short term rate limit violations, the universal standard is to reject requests with 429 Too Many Requests. It is important to communicate rate limits to API consumers by providing clear documentation and feedback when they exceed request limits. Additional information can be added in the response headers or body instructing the client when the throttle will be cleared or when the request can be retried.
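As a sketch of what such a rejection might carry, the Python function below builds a 429 response with a Retry-After header and a short JSON explanation. The function name, the error code, and the message wording are assumptions for illustration, not a required format.

```python
import json


def too_many_requests(retry_after_seconds, detail="Rate limit exceeded"):
    """Build a (status, headers, body) triple for a throttled request."""
    seconds = int(retry_after_seconds)
    headers = {
        "Content-Type": "application/json",
        # Retry-After is a standard HTTP header; here it carries a delay in seconds.
        "Retry-After": str(seconds),
    }
    body = json.dumps({
        "error": "too_many_requests",  # hypothetical error code
        "message": f"{detail}. Retry in {seconds} seconds.",
    })
    return 429, headers, body
```

Returning a plain triple keeps the example framework-neutral; the same values can be mapped onto whatever response object your gateway or web framework uses.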

For long term quota violations, a number of different actions can be taken. You could reject the requests, similar to short term rate limiting, or handle them in other ways such as adding an overage fee. A warning message such as ‘API Rate Limit Exceeded’ can inform users of the violation and indicate that their requests will not be processed until the limit resets. An easy way to manage quotas is with Moesif’s API Governance features. This enables you to add rules that enforce quotas and plan limits with just a simple server integration or API gateway plugin, in a few clicks. Setting limits is crucial to ensure fair usage and prevent abuse while balancing system stability and user experience. Instructions on how to do this are below:

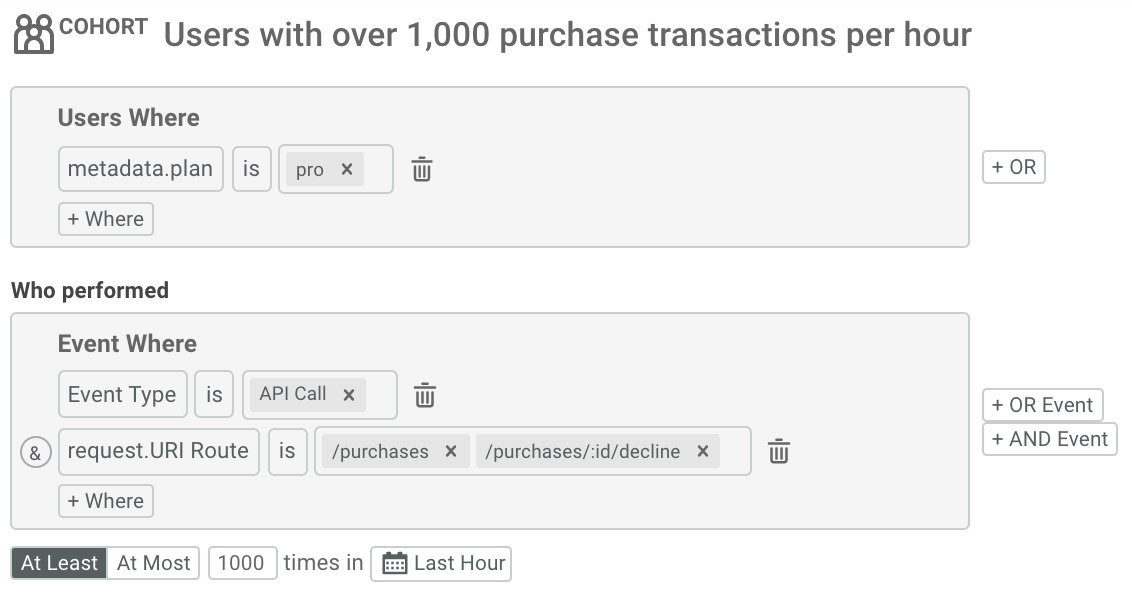

- Within Moesif, create a user cohort under the User Lookup tab. Add your criteria for when a user is considered to be exceeding their quota. In this example, that is when a user makes more than 1,000 requests to /purchases or /purchases/:id/decline within an hour.

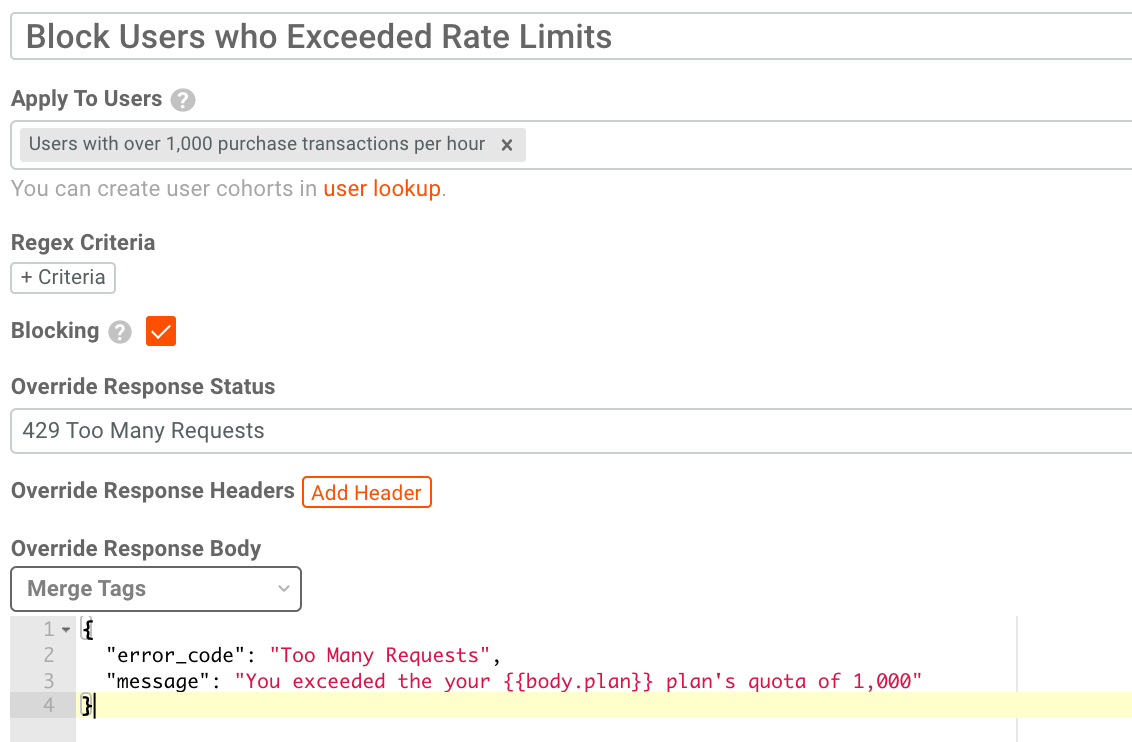

- Now that we created the cohort, go to API Governance under the Alerting & Governance tab. From here, create a new governance rule as shown below. In this case, we are short circuiting the request with the status code 429 Too Many Requests. We also provide an informational message on why the request is rejected.

Informing customers of rate limit and quota violations

Like any fault or error condition, you should have active monitoring and alerting to understand when customers are approaching or exceeding their limits and quotas, which can lead to rate limit errors. Your customer success team should proactively reach out to customers who run into these issues and help them optimize their integration. Customers also need to understand the time period each limit applies to, so they can avoid exceeding the allowed number of requests within that timeframe. Because manual outreach can be slow and unscalable, you should have a system in place that automatically informs customers when they run into rate limits, since rejected transactions can cause issues in their applications.

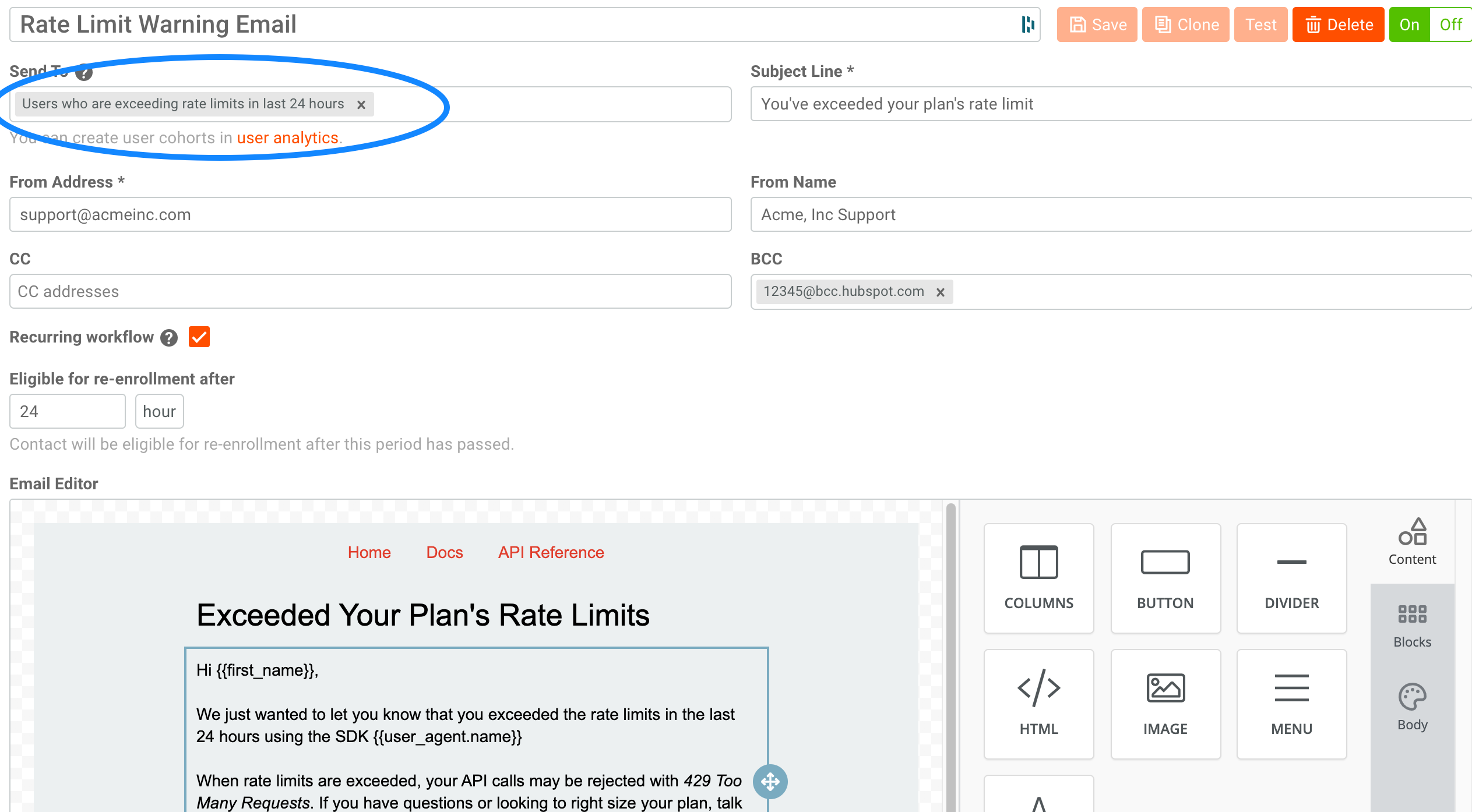

An easy way to keep customers informed of such issues is via Moesif’s behavioral email feature. Instructions on how to do this are below:

- Within Moesif, create a user cohort under the User Lookup tab. Add your criteria for when to alert customers, such as the number of API calls or a rate limit header reaching a certain threshold. In this example, we add the filter response.headers.Ratelimit-Remaining < 10

- Now that we created the cohort, go to Behavioral Emails under the Alerting & Governance tab. From here, create a new email template and design it to fit your requirements as shown below.

Rate limit remaining headers

Besides sending emails, it’s also helpful to tell customers how much of their rate limit remains using HTTP response headers. If you configure different limits for different user segments, these headers show each user their own remaining request allowance. There is an Internet Draft that specifies the headers RateLimit-Limit, RateLimit-Remaining, and RateLimit-Reset.
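A server could compute these three headers roughly as in the Python sketch below. It assumes a fixed-window limiter and assumes RateLimit-Reset is expressed as the number of seconds until the window resets; check the current revision of the draft before relying on exact semantics. The function name and parameters are hypothetical.

```python
import math


def rate_limit_headers(limit, used, window_seconds, now):
    """Headers describing the caller's current fixed window."""
    window_start = (now // window_seconds) * window_seconds
    # Seconds left until the current window ends and the counter resets.
    reset_in = math.ceil(window_start + window_seconds - now)
    return {
        "RateLimit-Limit": str(limit),
        "RateLimit-Remaining": str(max(limit - used, 0)),
        "RateLimit-Reset": str(reset_in),
    }
```

For example, with a limit of 100 per 60-second window, 40 requests used, and now at 130 seconds, the window started at 120, so 60 requests remain and the reset is 50 seconds away.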

By adding these headers, developers can easily set up their HTTP clients to retry once the correct time has passed. Otherwise, you may see unnecessary traffic because a developer won’t know exactly when to retry a rejected request, which creates a bad customer experience. Rate limiting still does its main job of protecting your systems from abuse and from malicious attacks designed to overwhelm resources; the headers simply make the limits easier for legitimate clients to live with.
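On the client side, honoring these headers might look like the following Python sketch. It assumes the header values are delays in seconds and that send is a caller-supplied function returning a (status, headers, body) triple; both are assumptions made for this example rather than part of any particular HTTP library.

```python
import time


def retry_delay(headers, default=1.0):
    """Seconds to wait before retrying, taken from the response headers when present."""
    normalized = {name.lower(): value for name, value in headers.items()}
    for name in ("retry-after", "ratelimit-reset"):
        value = normalized.get(name)
        if value is not None:
            try:
                return max(float(value), 0.0)
            except ValueError:
                continue  # e.g. Retry-After given as an HTTP date; not handled here
    return default


def call_with_retry(send, max_attempts=3, sleep=time.sleep):
    """Call `send()` until it returns a non-429 status or attempts run out."""
    for attempt in range(1, max_attempts + 1):
        status, headers, body = send()
        if status != 429 or attempt == max_attempts:
            return status, headers, body
        sleep(retry_delay(headers))
```

Waiting exactly as long as the server asks avoids both hammering the API with premature retries and sitting idle longer than necessary.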

Rate limit implementation errors and 429 too many requests

Even a protection mechanism like rate limiting can have errors of its own. Rate limiting algorithms are meant to keep the flow of requests steady regardless of input fluctuations, but they depend on infrastructure that can break. For example, a bad network connection to Redis could cause reads of the rate limit counters to fail. In such scenarios, it’s important not to reject all requests or lock out users just because your Redis cluster is inaccessible. Your rate limiting implementation should fail open rather than fail closed, meaning all requests are allowed while the rate limit implementation is faulting.
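Failing open can be as simple as wrapping the limiter call, as in this Python sketch. Here check_limit stands in for whatever function queries your counter store; it and the logger name are hypothetical.

```python
import logging

logger = logging.getLogger("ratelimit")


def is_allowed(check_limit, client_id):
    """Ask the limiter, but let the request through if the limiter itself fails."""
    try:
        return check_limit(client_id)
    except Exception:
        # Fail open: a broken counter store should not lock every customer out.
        logger.exception("rate limiter unavailable; allowing request for %s", client_id)
        return True
```

Logging the failure matters as much as allowing the request, because a limiter that silently fails open leaves your backend unprotected until someone notices.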

Additionally, adjusting limits based on user behavior and data analytics is crucial. Regularly reviewing usage patterns and analytics helps you make informed adjustments to your rate limiting strategy, ensuring both system protection and user satisfaction. This also means rate limiting is not a workaround for poor capacity planning: you should still have sufficient capacity to handle these requests, or design your system to scale to handle a large influx of new requests. This can be done through auto-scaling, timeouts, and automatic trips that enable your API to keep functioning.

Conclusion

Quotas and rate limits are two tools that enable you to better manage and protect your API resources. Enforcing rate limits keeps the system stable and fair, and algorithms like the leaky bucket keep the flow of requests steady so that bursts do not overload your backend. Yet rate limits differ from quotas in their business use case, and it’s critical to understand the differences and limitations of each. It’s also important to provide tooling so that customers can stay informed of rate limit issues and can audit 4xx errors, including 429.