Using Moesif, AWS, and Stripe to Monetize Your AI APIs - Part 1: Integrating The Platforms

This is the first part of a four-part series about AI API monetization.

As the wave of AI sweeps through the technology landscape, many have hopped on board. An interesting and often overlooked detail is that many AI capabilities are served through APIs. Polished user interfaces sit on top of the mechanisms where the magic actually happens: the APIs. So when you generate revenue from an AI platform, the APIs are what drive that revenue.

This raises the challenge of controlling access to the APIs, metering API usage, and charging customers for that usage. The overarching term for this is API monetization. Simpler forms of API monetization charge only per API call. AI platforms, by contrast, generally charge on metrics such as “tokens used” and other AI-specific units of usage. Luckily, platforms exist that can expedite this process and make it simple to implement. We will focus on Moesif, AWS, and Stripe to implement API monetization for AI APIs.

You’ll learn to use each platform to fulfill a specific role in the setup:

- AWS Lambda will power the API backend logic.

- Amazon API Gateway will host the API, providing access control and API management.

- Then, you will integrate Moesif with AWS to add metering capabilities. This will allow us to send usage data over to Stripe. Stripe will take care of collecting payment or burning down balances based on usage.

Table of Contents

- The AI API

- Setting up AWS Lambda

- Setting up Amazon API Gateway

- Protecting the API with API Gateway

- Integrating Moesif and Stripe

- Conclusion

The AI API

In almost all cases, you will either expose an AI service through an API or leverage existing AI services to power your API's functionality. In our example, we will use OpenAI’s /chat/completions endpoint to demonstrate how to monetize an AI API. This provides a realistic depiction of an AI API, since many companies use OpenAI-compatible API specifications to allow drop-in replacement of OpenAI APIs.

We will create a single endpoint called /ai-chat that will function similarly to OpenAI’s chat endpoint. Well, exactly like it, since it will leverage the /chat/completions endpoint under the hood! The following two sections show how the request and response will look.

POST Request Payload

```json
{
  "model": "gpt-3.5-turbo",
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello!"
    }
  ]
}
```

Response Payload

```json
{
  "id": "chatcmpl-123",
  "object": "chat.completion",
  "created": 1677652288,
  "model": "gpt-3.5-turbo-0125",
  "system_fingerprint": "fp_44709d6fcb",
  "choices": [{
    "index": 0,
    "message": {
      "role": "assistant",
      "content": "\n\nHello there, how may I assist you today?"
    },
    "logprobs": null,
    "finish_reason": "stop"
  }],
  "usage": {
    "prompt_tokens": 9,
    "completion_tokens": 12,
    "total_tokens": 21
  }
}
```

For a complete reference for the API we will be using, see the OpenAI Chat Completions docs.

For our monetization efforts, we must accurately meter the usage. In that regard, only the response fields for prompt_tokens and completion_tokens concern us. These two fields give us the input and output token counts for the prompt and response, respectively. For the rest of the tutorial, you can either follow along using your own AI API or use the OpenAI Chat Completions one that we will be using throughout. Either works as long as you have a field in the response that outlines how many input and output tokens the query has consumed.
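To make the metering input concrete, the following Python sketch pulls the two token counts out of a Chat Completions response body shaped like the one above. The names `extract_token_usage` and `TokenUsage` are hypothetical helpers for illustration. They are not part of the OpenAI or Moesif SDKs. Treating a missing `usage` object as zero tokens is also an assumption: with this choice, failed calls are metered as free rather than raising an error.

```python
from typing import Any, Mapping, NamedTuple


class TokenUsage(NamedTuple):
    """Input and output token counts reported by a Chat Completions response."""
    prompt_tokens: int
    completion_tokens: int


def extract_token_usage(response: Mapping[str, Any]) -> TokenUsage:
    """Read the metering-relevant fields from a Chat Completions response body.

    Missing fields default to 0, so an error response without a usage
    object is metered as zero tokens instead of raising.
    """
    usage = response.get("usage") or {}
    return TokenUsage(
        prompt_tokens=int(usage.get("prompt_tokens", 0)),
        completion_tokens=int(usage.get("completion_tokens", 0)),
    )


if __name__ == "__main__":
    sample = {
        "id": "chatcmpl-123",
        "usage": {"prompt_tokens": 9, "completion_tokens": 12, "total_tokens": 21},
    }
    print(extract_token_usage(sample))  # TokenUsage(prompt_tokens=9, completion_tokens=12)
```

Returning a named tuple keeps input and output tokens separate. This matters if you later price them differently.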

Setting up AWS Lambda

To set up the Lambda function, we assume you have the following prerequisites:

- An AWS account

- A user with administrative access

Step 1: Install the Dependencies

The Lambda function requires these dependencies:

- moesif_aws_lambda, the Moesif middleware for AWS Lambda

- requests, for calling the OpenAI API

Install these dependencies in a folder:

```shell
pip install --target ./package moesif_aws_lambda requests
```

Here we put these dependencies inside the package/ folder.

Step 2: Write the Lambda Function

Next, we write a Lambda function lambda_handler in a file lambda_function.py:

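```python
import json
import os

import requests
from moesif_aws_lambda.middleware import MoesifLogger

# The Moesif middleware reads MOESIF_APPLICATION_ID from the Lambda environment (see Step 5).
moesif_options = {}


@MoesifLogger(moesif_options)
def lambda_handler(event, context):
    # With Lambda proxy integration, the client's JSON payload arrives as a string in event["body"].
    req_body = json.loads(event.get("body") or "{}")

    # The Authorization environment variable holds "Bearer YOUR_OPENAI_API_KEY".
    headers = {"Authorization": os.environ["Authorization"]}

    # Forward the prompt to OpenAI's Chat Completions endpoint.
    ai_res = requests.post(
        "https://api.openai.com/v1/chat/completions", json=req_body, headers=headers
    )
    res_body = ai_res.json()

    # Pass OpenAI's status code through and wrap its response (including token usage).
    return {
        "statusCode": ai_res.status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"request_payload": res_body}),
    }
```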

This simple Lambda function performs the following tasks:

- It extracts the request body from the Lambda event object. The request body contains the prompt we want to send to OpenAI, the same as the example POST request payload.

- It sets the Authorization header to the OpenAI API key.

- It then sends an HTTP POST request to the OpenAI API’s /chat/completions endpoint.

- Finally, the function returns a response to the client, containing the response from the OpenAI API in the body.request_payload field.

In a later section, you will set up Amazon API Gateway to create an API and integrate it with the Lambda function. When you send requests to your API, API Gateway passes the request data to the Lambda function in the event object, which the function then processes.
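With Lambda proxy integration, API Gateway delivers the client's request body to the function as a string in `event["body"]`, alongside an `isBase64Encoded` flag. You may want the handler to tolerate a missing body or a base64-encoded one. In that case, a small helper like the hypothetical `parse_proxy_body` below could replace the `json.loads` call in `lambda_handler`. This is a sketch only. It is not part of the Moesif middleware.

```python
import base64
import json
from typing import Any, Dict


def parse_proxy_body(event: Dict[str, Any]) -> Dict[str, Any]:
    """Return the client's JSON payload from an API Gateway Lambda proxy event."""
    raw = event.get("body")
    if raw is None:
        # The request carried no body at all.
        return {}
    if event.get("isBase64Encoded"):
        # API Gateway can base64-encode the body, e.g. when binary media types are configured.
        raw = base64.b64decode(raw).decode("utf-8")
    return json.loads(raw)
```

Inside the handler, you would then write `req_body = parse_proxy_body(event)`.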

Step 3: Prepare a Zip File Archive

To deploy the Lambda function with its dependencies, you need to package them into a zip file archive. Suppose your current directory structure looks like this:

```text
.
├── lambda_function.py
└── package/
```

Then you can create a zip file archive function.zip by executing these commands:

```shell
cd package/
zip -r9 ../function.zip .
cd ..
zip -g function.zip lambda_function.py
```
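If the `zip` command isn't available on your machine (for example, on Windows), you can build the same archive with Python's standard `zipfile` module. This sketch mirrors the commands above. Files under `package/` go to the root of the archive, and `lambda_function.py` is added alongside them. The function name `build_lambda_zip` is hypothetical.

```python
import zipfile
from pathlib import Path


def build_lambda_zip(package_dir: Path, handler_file: Path, zip_path: Path) -> None:
    """Create a Lambda deployment archive: dependencies at the root plus the handler file."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED, compresslevel=9) as zf:
        # Equivalent of `cd package/ && zip -r9 ../function.zip .`:
        # store each file relative to package/, so installed packages sit at the zip root.
        for path in sorted(package_dir.rglob("*")):
            if path.is_file():
                zf.write(path, arcname=path.relative_to(package_dir).as_posix())
        # Equivalent of `zip -g function.zip lambda_function.py`.
        zf.write(handler_file, arcname=handler_file.name)
```

For the directory layout above, you would run `build_lambda_zip(Path("package"), Path("lambda_function.py"), Path("function.zip"))` from the project root.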

Step 4: Create the Lambda Function in the Console

- Go to the Functions page of the Lambda console.

- Select Create Function.

- Select Author from scratch.

- Fill out the Basic information section. Make sure you select a Python runtime and choose the 64-bit x86 architecture. This article uses the Python 3.12 runtime.

- Select Create Function.
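If you prefer scripting to clicking through the console, the same choices map onto the arguments of the `create_function` call on a boto3 Lambda client. The sketch below only builds that argument dictionary. Several things are assumptions here: the function name, the IAM execution role ARN, and the use of boto3 itself. (The console can create an execution role for you, but the API expects an existing one.)

Note the `Handler` value. `lambda_function.lambda_handler` is the console's default for Python, and it tells Lambda to call `lambda_handler` in `lambda_function.py`. This is why the file and function names from Step 2 matter.

```python
from typing import Any, Dict, Optional


def create_function_kwargs(
    function_name: str,
    role_arn: str,
    zip_bytes: bytes,
    env: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's Lambda client create_function call."""
    kwargs: Dict[str, Any] = {
        "FunctionName": function_name,
        "Runtime": "python3.12",          # Python 3.12 runtime, as in the console steps
        "Architectures": ["x86_64"],      # 64-bit x86 architecture
        "Role": role_arn,                 # existing IAM execution role (assumed)
        "Handler": "lambda_function.lambda_handler",  # file name + function name
        "Code": {"ZipFile": zip_bytes},   # the archive built in Step 3
    }
    if env:
        # Optional: the environment variables from Step 5.
        kwargs["Environment"] = {"Variables": dict(env)}
    return kwargs
```

With boto3 installed and credentials configured, usage would look like `boto3.client("lambda").create_function(**create_function_kwargs("ai-chat", "arn:aws:iam::123456789012:role/YOUR_LAMBDA_ROLE", Path("function.zip").read_bytes()))`. Because the zip bytes are passed at creation time, this call also covers the upload in Step 6.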

Step 5: Add Environment Variables in Lambda

We will add two environment variables for the Lambda function:

- A MOESIF_APPLICATION_ID environment variable. The Moesif AWS Lambda middleware expects this variable so that it can connect to your Moesif account and send analytics.

- An OpenAI API key. Store it in an environment variable named Authorization, in the format Bearer YOUR_OPENAI_API_KEY. The function sends this value as the Authorization header of its request to OpenAI.

Follow these steps to add these environment variables:

- Go to the Functions page of the Lambda console.

- Select the function you’ve created.

- Select Configuration and then select Environment variables.

- Under Environment variables, select Edit.

- Select Add environment variable to enter key and value for an environment variable.

- Enter the keys and values for the environment variables:

  - For the Moesif Application ID, set Key to MOESIF_APPLICATION_ID and Value to your Moesif Application ID.

  - For your OpenAI API key, set Key to Authorization and Value to Bearer YOUR_OPENAI_API_KEY. Replace YOUR_OPENAI_API_KEY with your OpenAI API key.

- Select Save.

To get your Moesif Application ID, follow these steps:

- Log into Moesif Portal.

- Select the account icon to bring up the settings menu.

- Select Installation or API Keys.

- Copy your Moesif Application ID from the Collector Application ID field.
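The handler reads `os.environ["Authorization"]` directly. As a result, a missing value only shows up as a `KeyError`, and a malformed one only as an error from OpenAI, both at request time. A hypothetical check like the following, run once when the function loads, would surface Step 5 configuration mistakes in the function's logs earlier. It only validates the shape of the values, not whether the keys are actually valid.

```python
from typing import List, Mapping


def check_lambda_env(env: Mapping[str, str]) -> List[str]:
    """Return human-readable problems with the two environment variables from Step 5."""
    problems = []
    if not env.get("MOESIF_APPLICATION_ID"):
        problems.append("MOESIF_APPLICATION_ID is missing or empty")
    auth = env.get("Authorization", "")
    # The value must be the literal prefix "Bearer " followed by a non-empty key.
    if not auth.startswith("Bearer ") or not auth[len("Bearer "):].strip():
        problems.append('Authorization must look like "Bearer YOUR_OPENAI_API_KEY"')
    return problems
```

For example, at module level in `lambda_function.py` you could call `check_lambda_env(os.environ)` and `print` any problems it returns.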

Step 6: Deploy the Lambda Function

If you’ve followed the preceding steps, you now have a zip file archive that contains your Lambda function code and its dependencies. Next, follow the instructions in AWS Lambda docs to upload and deploy your Lambda function code as a zip file archive.
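You can also script this step. The following sketch uploads `function.zip` with the `update_function_code` call on a boto3 Lambda client. The client is passed in as a parameter so the helper can be exercised without AWS credentials. `deploy_zip` is a hypothetical name. Direct uploads like this are size-limited (AWS documents 50 MB for a zipped archive uploaded through the API or SDKs). Larger archives need to go through Amazon S3.

```python
from pathlib import Path
from typing import Any


def deploy_zip(lambda_client: Any, function_name: str, zip_path: Path) -> dict:
    """Upload a zip file archive as the new code for an existing Lambda function.

    lambda_client is expected to be a boto3 Lambda client, e.g. boto3.client("lambda").
    """
    return lambda_client.update_function_code(
        FunctionName=function_name,
        ZipFile=zip_path.read_bytes(),
    )
```

Usage with real credentials would look like `deploy_zip(boto3.client("lambda"), "ai-chat", Path("function.zip"))`, where `ai-chat` stands in for your function's name.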

Setting up Amazon API Gateway

To set up Amazon API Gateway, we assume you have the following prerequisites:

- An AWS account

- A user with administrative access

Step 1: Create a REST API

API Gateway offers several API types. For our use case, create a REST API.

- Go to your API Gateway console.

- Select Create API.

- Under REST API, select Build.

- Select New API.

- For API endpoint type, select Regional. Fill out the rest of the fields as you need.

- Select Create API.

Step 2: Create API Resource

After you create the API in the preceding step, it contains only the root (/) resource. In the following steps, we create the /ai-chat resource.

- Go to your API Gateway console.

- Go to the Resources page.

- Select Create resource

- Enter ai-chat for Resource name.

- Select Create Resource.

Step 3: Add API Method

Next, you must add an HTTP POST method to the /ai-chat resource. This allows clients to send HTTP POST requests to the /beta/ai-chat endpoint, where beta is the deployment stage of the API (this tutorial uses beta as the stage name when deploying in Step 4).

- Go to your API Gateway console.

- Go to the Resources page and select the /ai-chat resource.

- In the Methods pane, select Create method.

- For Method type, select POST.

- For Integration type, select Lambda function.

- Enable Lambda proxy integration.

- For Lambda function, select the Lambda function you’ve created in the preceding section.

- Select Create method.

Step 4: Deploy the API

With the resource and method in place, you can now deploy the API:

- Go to your API Gateway console.

- Go to the Resources page.

- Select Deploy API.

- Select *New stage* and then enter a stage name.

- Optionally, add a description of your API.

- Select Deploy.

After deployment finishes, the Stages page appears. In the Stage details pane, the Invoke URL shows the URL to call your API.

For example, consider the URL https://abcdefgh12.execute-api.ap-southeast-2.amazonaws.com/beta where beta is the stage name. You can send POST requests to https://abcdefgh12.execute-api.ap-southeast-2.amazonaws.com/beta/ai-chat with the following body:

```json
{
  "model": "gpt-3.5-turbo",
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful assistant."
    },
    {
      "role": "user",
      "content": "Hello!"
    }
  ]
}
```

The associated Lambda function takes the request body and calls the OpenAI Chat Completions API. If you’ve followed the preceding instructions properly, you should see a response similar to the following:

```json
{
  "request_payload": {
    "id": "chatcmpl-123",
    "object": "chat.completion",
    "created": 1677652288,
    "model": "gpt-3.5-turbo-0125",
    "system_fingerprint": "fp_44709d6fcb",
    "choices": [{
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "\n\nHello there, how may I assist you today?"
      },
      "logprobs": null,
      "finish_reason": "stop"
    }],
    "usage": {
      "prompt_tokens": 9,
      "completion_tokens": 12,
      "total_tokens": 21
    }
  }
}
```
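The invoke URL in the example above follows the pattern API Gateway uses for a REST API's default endpoint: `https://{rest-api-id}.execute-api.{region}.amazonaws.com/{stage}`, with the resource path appended. The hypothetical helper below composes it. URLs for custom domain names would not follow this pattern.

```python
def build_invoke_url(rest_api_id: str, region: str, stage: str, resource: str = "") -> str:
    """Compose a default REST API invoke URL, optionally with a resource path appended."""
    base = f"https://{rest_api_id}.execute-api.{region}.amazonaws.com/{stage}"
    # Accept the resource with or without a leading slash.
    return f"{base}/{resource.lstrip('/')}" if resource else base
```

With the example values, `build_invoke_url("abcdefgh12", "ap-southeast-2", "beta", "ai-chat")` yields the /beta/ai-chat URL used above.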

Protecting the API with API Gateway

If you have followed along so far, you have a functional AI API that can generate appropriate responses to requests. In this section, we implement two basic security mechanisms to protect the API:

- A usage plan. This allows you to restrict the rate or total number of requests a client can make to the API.

- An API key to control access to the API.

Step 1: Set Up a Usage Plan

Before you can add an API key, you must first create a usage plan and associate it with a stage:

Create a Usage Plan

- Go to your API Gateway console.

- Go to the Usage plans page.

- Select Create usage plan

- Enter the name and an optional description for the usage plan.

- Enter the number of requests per second in Rate.

- Enter the number of concurrent requests in Burst.

- Lastly, under Quota, enter the total number of requests a client can make to your API in a time period.

- Select Create usage plan.
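AWS documents that API Gateway throttles requests using a token bucket algorithm. Rate roughly corresponds to how fast the bucket refills, and Burst to its capacity. The simplified Python model below can help you reason about the values to enter. It is an illustration only, not API Gateway's implementation.

Separately from throttling, the quota you set in the last step caps the total number of requests per period. AWS also notes that usage plan throttling and quotas are applied on a best-effort basis. Treat them as a safeguard rather than a billing control.

```python
class TokenBucket:
    """Simplified token-bucket model of a usage plan's Rate and Burst settings.

    Illustration only; this is not API Gateway's actual implementation.
    """

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate            # tokens refilled per second (Rate)
        self.burst = burst          # bucket capacity (Burst)
        self.tokens = float(burst)  # the bucket starts full
        self.last = 0.0             # time of the previous check, in seconds

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` would be accepted."""
        # Refill in proportion to the elapsed time, never beyond the burst capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # each accepted request consumes one token
            return True
        return False  # API Gateway would respond with 429 Too Many Requests
```

Consider `rate=10` and `burst=5`. Five requests at the same instant are accepted, and a sixth is rejected. After 0.1 seconds, one more token has been refilled, so one more request gets through.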

Associate a Stage to a Usage Plan

- Go to your API Gateway console.

- Go to the Usage plans page.

- Select your usage plan.

- Go to the Associated stages tab and select Add stage.

- Select your API and the stage.

- Select Add to usage plan.

Step 2: Add an API Key

Setting up an API key to protect access to your API consists of the following steps:

- Creating an API key for a stage of your API.

- Requiring an API key on the API’s POST method.

Create API Key

- Go to your API Gateway console.

- Go to the Usage plans page.

- Select your usage plan.

- Go to the Associated API keys tab and select Add API key.

- Select Create and add new key.

- Enter the name and an optional description for your API key.

- Select Generate a key automatically.

- Select Add API key.

Require API Key on Your API’s POST Method

After you follow these steps, anyone who wants to access the API must include the API key in the x-api-key request header:

- Go to your API Gateway console.

- Select your REST API.

- Go to the Resources page.

- Select the method. In our case, select the POST method for the /ai-chat resource.

- Select the Method request tab and then select Edit.

- Select the API key required checkbox.

- Select Save.

- Select Deploy API and deploy API to the stage you have associated your usage plan with.

If you’ve followed the steps successfully, requests that include a valid API key in the x-api-key header get the same response back from your API as before. Requests without a valid key receive a 403 Forbidden response from API Gateway.
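Once the key is required, every client call has to carry it in the `x-api-key` header. Here is a minimal client sketch using only Python's standard library. `build_ai_chat_request` and `call_ai_chat` are hypothetical names. `invoke_url` is the stage URL from the Stages page, for example `https://abcdefgh12.execute-api.ap-southeast-2.amazonaws.com/beta`. The client also unwraps the `request_payload` field that our Lambda function puts around OpenAI's response.

```python
import json
import urllib.request
from typing import Any, Dict


def build_ai_chat_request(invoke_url: str, api_key: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Build a POST to the /ai-chat resource that carries the API key in x-api-key."""
    return urllib.request.Request(
        url=f"{invoke_url.rstrip('/')}/ai-chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def call_ai_chat(invoke_url: str, api_key: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request and return OpenAI's response from the request_payload field."""
    req = build_ai_chat_request(invoke_url, api_key, payload)
    # urlopen raises urllib.error.HTTPError for 4xx/5xx, e.g. 403 when the key is missing or invalid.
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read())["request_payload"]
```

Calling `call_ai_chat(invoke_url, "YOUR_API_KEY", body)` with the example request body should return the completion, including its `usage` object.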

Integrating Moesif and Stripe

In the preceding steps, we’ve successfully finished the following tasks:

- Set up the AI API.

- Integrated Moesif with AWS.

You should now receive API traffic analytics in Moesif for each call to the API. Since Moesif now receives details about every API call, we’re ready to calculate usage. After calculating usage, we need a way to report it to Stripe. Moesif integrates directly with Stripe in just a few steps.

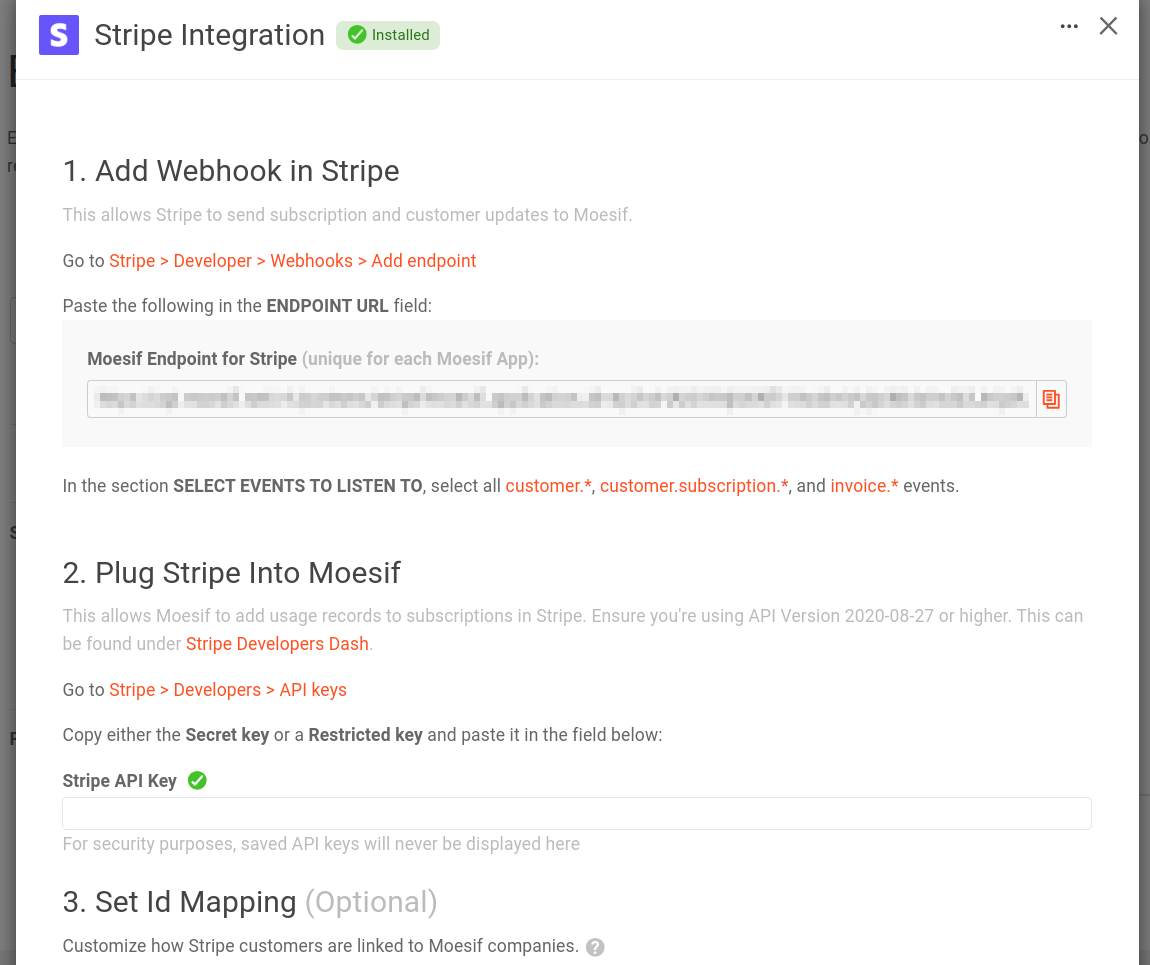
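Moesif's Billing Meter, covered in the next part, performs the usage calculation for you. To make "calculating usage" concrete, the sketch below sums input and output tokens per customer company from a list of logged calls. The record shape, with a `company_id` and the API's response body, is a hypothetical simplification. It is not Moesif's event schema.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Mapping, Tuple


def total_tokens_by_company(events: Iterable[Mapping[str, Any]]) -> Dict[str, Tuple[int, int]]:
    """Sum (prompt_tokens, completion_tokens) per company across logged API calls.

    Each event is assumed (hypothetically) to look like:
    {"company_id": "...", "response": {"body": {"request_payload": {"usage": {...}}}}}
    """
    totals: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for event in events:
        # Calls without a usage object (e.g. upstream errors) count as zero tokens.
        usage = event["response"]["body"]["request_payload"].get("usage") or {}
        running = totals[event["company_id"]]
        running[0] += int(usage.get("prompt_tokens", 0))
        running[1] += int(usage.get("completion_tokens", 0))
    return {company: (p, c) for company, (p, c) in totals.items()}
```

Keeping input and output totals separate leaves room to price them differently once the numbers are reported to Stripe.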

To configure Stripe in Moesif, follow these steps:

- Log into Moesif Portal.

- Select the account icon to bring up the settings menu.

- Select Extensions.

a. Find the Stripe Integration in the extensions and then select Configure.

b. In the dialog that appears, follow the instructions for adding the Moesif Stripe webhook into Stripe.

![Stripe Integration dialog in Moesif]()

- On the Developers screen in Stripe, select Webhooks and then select Add Endpoint.

- Add the Moesif endpoint URL and select Select Events. Select all customer.*, customer.subscription.*, and invoice.* events. Then, select Add Events.

- Lastly, in the original Add Endpoints screen, select Add Endpoints.

- Once you add the Moesif endpoint URL as a Stripe webhook, add your Stripe API key in the Stripe Integration dialog in Moesif.

a. In Stripe, go to the Developers screen and select API Keys.

b. Copy a private key for your API in either the Secret key or a generated Restricted keys pane on the screen. You can use either key.

c. In Moesif, paste the API key into the Stripe API Key field.

- Scroll to the bottom of the Stripe Integration dialog in Moesif and select Save.

You can optionally customize the ID mapping in Moesif. The default works for most purposes, and this example uses it. However, if you need to customize it, you can specify how to map the Stripe Customer objects to Company entities in Moesif. For more information, see Setting the Id Mapping for Stripe.

Conclusion

At this point, we have all of the wiring in place to begin monetizing APIs. Amazon API Gateway can now proxy traffic through the Lambda function to the upstream AI API. Moesif tracks that traffic and, once a Billing Meter is configured, meters it and reports usage to Stripe.

In the next part of this tutorial, we cover how to set up a Billing Meter in Moesif. The Billing Meter meters usage based on the configuration we specify and then reports that usage to Stripe. After that, we will use Moesif’s Governance Rule feature to block users from accessing the API once they have run out of prepaid credits.

Want to follow along as we build out this billing infrastructure to monetize APIs? Sign up for a free trial of Moesif and follow this article series for a step-by-step path for implementing API monetization. Until next time, stay tuned for the next part in this series on monetizing AI APIs!